
The distant horizon is always blurred, its details obscured by sheer distance and atmospheric haze. This is why forecasting the future is so imprecise: We cannot clearly see the outlines of the shapes and events ahead of us. Instead, we make educated guesses.

The newly published AI 2027 scenario, developed by a team of AI researchers and forecasters with experience at institutions such as OpenAI and The Center for AI Policy, offers a detailed forecast two to three years into the future that includes specific technical milestones. Because it is near-term, it speaks with great clarity about our AI future.

Informed by extensive expert feedback and scenario-planning exercises, AI 2027 outlines a quarter-by-quarter progression of anticipated AI capabilities, notably multimodal models that achieve advanced reasoning and autonomy. What makes this forecast particularly noteworthy is both its specificity and the credibility of its contributors, who have direct insight into current research pipelines.

The most notable forecast is that artificial general intelligence (AGI) will be achieved in 2027, followed months later by artificial superintelligence (ASI). AGI matches or exceeds human capabilities across virtually all cognitive tasks, including scientific research, while demonstrating adaptability and the capacity for self-improvement. ASI goes further, representing systems that dramatically surpass human intelligence, with the ability to solve problems we cannot even comprehend.

Like many predictions, these rest on assumptions, not least of which is that AI models and applications will continue to progress exponentially, as they have for the last several years. That is plausible, but exponential progress is not guaranteed, especially as scaling these models may now be yielding diminishing returns.

Not everyone agrees with these forecasts. Ali Farhadi, CEO of the Allen Institute for Artificial Intelligence, told The New York Times: "I'm all for projections and forecasts, but this [AI 2027] forecast doesn't seem to be grounded in scientific evidence, or the reality of how things are evolving in AI."

But others view this evolution as plausible. Anthropic co-founder Jack Clark wrote in his Import AI newsletter that AI 2027 is "the best treatment yet of what 'living in an exponential' might look like." He added that it is a "technically astute narrative of the next few years of AI development." This timeline also aligns with that of Anthropic CEO Dario Amodei, who has said that AI able to surpass humans at almost everything could arrive within the next two to three years. And Google DeepMind said in a new research paper that AGI could plausibly arrive by 2030.

This seems like an auspicious time. There have been similar moments in history, including the invention of the printing press and the spread of electricity. However, those advances took many years, even decades, to have a significant impact.

The arrival of AGI feels different, and potentially frightening, especially if it is imminent. AI 2027 describes one scenario in which superintelligent AI, misaligned with human values, destroys humanity. If the authors are right, the most consequential risk for humanity may now sit within the same planning horizon as your next smartphone upgrade. For its part, the Google DeepMind paper notes that human extinction is a possible outcome of AGI.

Opinions change slowly until people are presented with overwhelming evidence. This is one takeaway from Thomas Kuhn's singular work "The Structure of Scientific Revolutions." Kuhn reminds us that worldviews do not shift overnight, until, suddenly, they do. And with AI, that shift may already be underway.

Before the appearance of large language models (LLMs) and ChatGPT, the median timeline projection for AGI was longer than it is today. The consensus among experts and prediction markets placed the median expected arrival of AGI around 2058. One prominent AI researcher once thought AGI was "30 to 50 years or even longer" away. However, the progress shown by LLMs led him to change his mind, and he has said it could arrive as soon as 2028.

If AGI arrives in the next few years and is quickly followed by ASI, the implications for humanity would be profound. Writing in Fortune, Jeremy Kahn argued that if AGI arrives in the next few years, it could indeed cause large job losses, as many organizations rush to automate roles.

A two-year AGI runway offers an insufficient grace period for individuals and businesses to adapt. Industries such as customer service, programming and data analysis could face dramatic upheaval before retraining infrastructure can scale. This pressure will only intensify if an economic downturn occurs during this period, when companies are already looking to reduce payroll costs and often replace staff with automation.

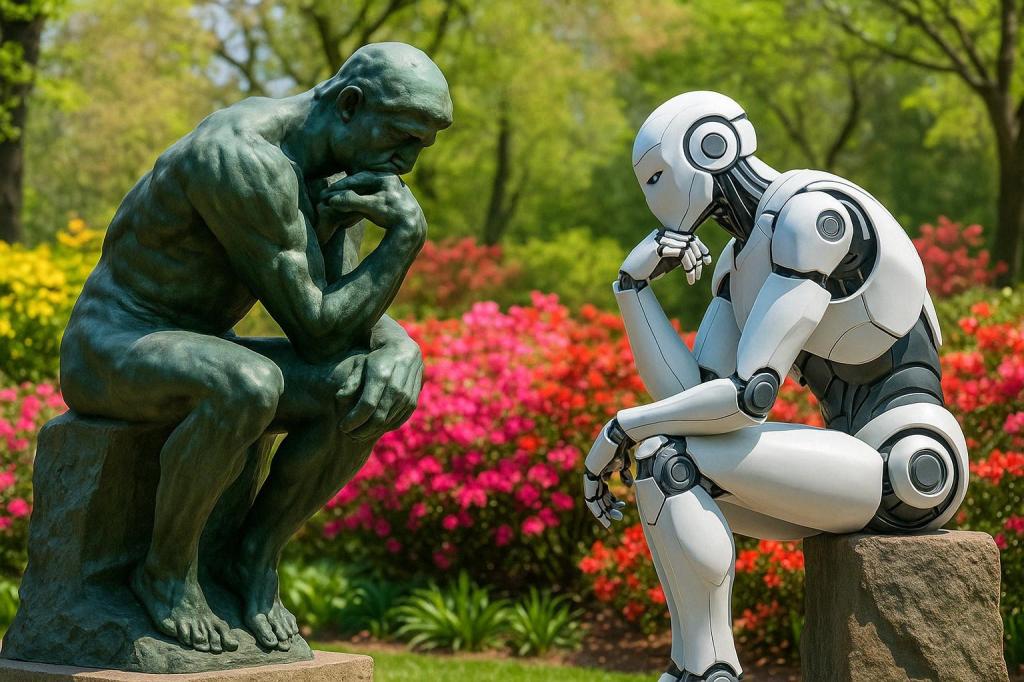

Even if AGI does not lead to extensive job losses or species extinction, there are other serious ramifications. Since the Age of Reason, human existence has been grounded in a belief that we matter because we think.

This belief that thinking defines our existence has deep philosophical roots. It was René Descartes, writing in 1637, who articulated the now-famous phrase "Je pense, donc je suis" ("I think, therefore I am"). He later rendered it in Latin as "Cogito, ergo sum." In so doing, he proposed that certainty could be found in the act of individual thought. Even if he were deceived by his senses or misled by others, the very fact that he was thinking proved that he existed.

In this view, the self is anchored in cognition. It was a revolutionary idea at the time, and it gave rise to Enlightenment humanism, the scientific method and, ultimately, modern democracy and individual rights. Humans as thinkers became the central figures of the modern world.

This raises a profound question: If machines can now think, or appear to, and we outsource our thinking to AI, what does that mean for the modern conception of the self? A recent study reported by 404 Media explores this conundrum. It found that when people rely on generative AI for work, they engage in less critical thinking, which over time can result in the deterioration of cognitive faculties.

Whether AGI arrives in the next few years or later, we must grapple quickly with its implications, not only for jobs and safety, but for who we are. And we must do so while also acknowledging its extraordinary potential to accelerate discovery, reduce suffering and expand human capabilities. For example, Amodei has said that "powerful AI" could compress 100 years of biological research and its benefits, including improved health, into 5 to 10 years.

The forecasts presented in AI 2027 may or may not be correct, but they are plausible and provocative. And that plausibility should be enough. As people with agency, and as members of companies, governments and societies, we must act now to prepare for what may be coming.

For businesses, this means investing in both technical AI safety research and organizational resilience, creating roles that integrate AI capabilities while amplifying human strengths. For governments, it requires accelerating the development of regulatory frameworks that address both immediate concerns, such as model evaluation, and longer-term existential risks. For individuals, it means cultivating uniquely human skills, including creativity, emotional intelligence and complex judgment, while developing healthy working relationships with AI tools that do not diminish our agency.

The time for abstract debate about distant futures has passed; concrete preparation for near-term transformation is urgently needed. Our future will not be written by algorithms alone. It will be shaped by the choices we make, and the values we uphold, starting today.

Gary Grossman is EVP of technology practice at Edelman and global lead of the Edelman AI Center of Excellence.