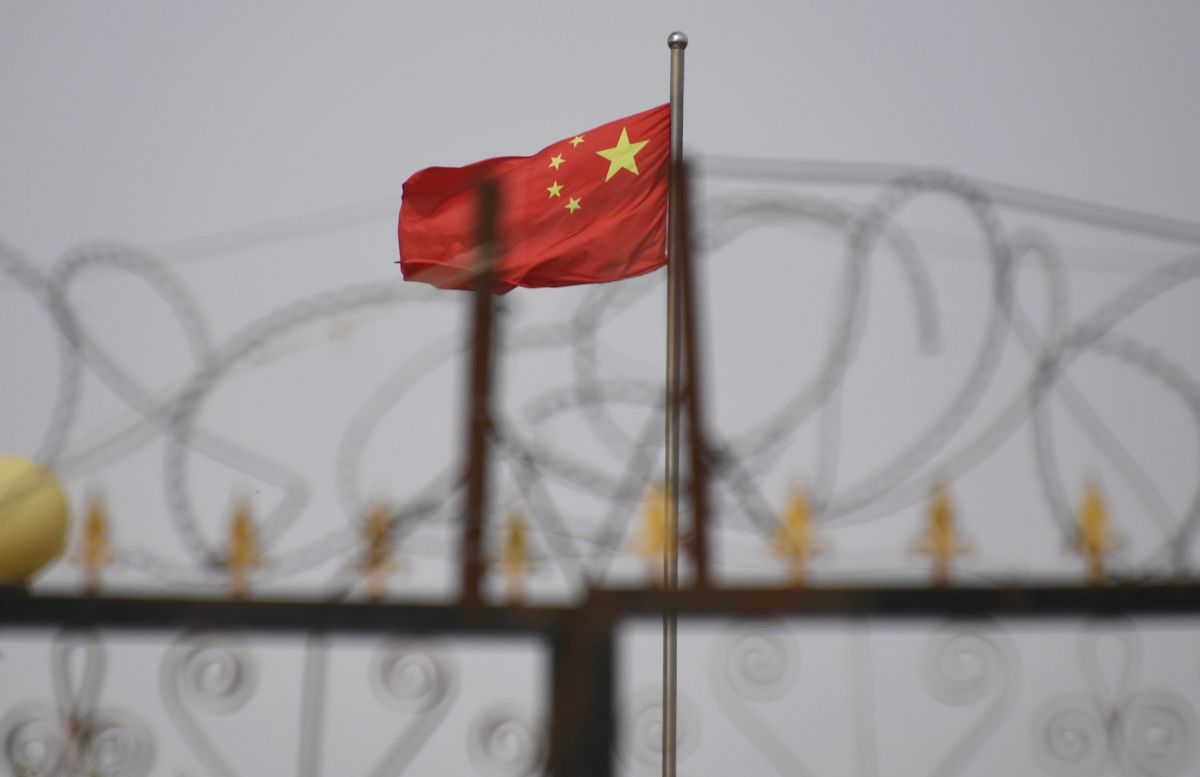

A complaint about poverty in rural China. A news report about a corrupt Communist Party member. A cry for help about corrupt cops shaking down entrepreneurs.

These are just a few of the 133,000 examples fed into a sophisticated large language model designed to automatically flag any piece of content the Chinese government considers sensitive.

A leaked database seen by TechCrunch reveals that China has developed an AI system that supercharges its already formidable censorship machine, extending far beyond traditional taboos like the Tiananmen Square massacre.

The system appears primarily geared toward censoring Chinese citizens online, but it could be used for other purposes, such as improving the already extensive censorship built into Chinese AI models.

Xiao Qiang, a researcher at UC Berkeley who studies Chinese censorship and who also examined the dataset, told TechCrunch that it was clear evidence that the Chinese government or its affiliates want to use LLMs to improve repression.

“Unlike traditional censorship mechanisms, which rely on human labor for keyword-based filtering and manual review, an LLM trained on such instructions would significantly improve the efficiency and granularity of state-led information control,” Qiang told TechCrunch.

This adds to growing evidence that authoritarian regimes are quickly adopting the latest AI technology. In February, for example, OpenAI said it caught multiple Chinese entities using LLMs to track anti-government posts and smear Chinese dissidents.

The Chinese Embassy in Washington, D.C., told TechCrunch in a statement that it opposes “groundless attacks and slander against China” and that China attaches great importance to developing ethical AI.

The dataset was discovered by security researcher NetAskari, who shared a sample with TechCrunch after finding it stored in an unsecured Elasticsearch database hosted on a Baidu server.

This does not indicate any involvement by either company; all kinds of organizations store their data with these providers.

There is no indication of who exactly built the dataset, but records show that the data is recent, with the latest entries dating from December 2024.

In language eerily reminiscent of how people prompt ChatGPT, the system’s creator tasks an unnamed LLM with figuring out whether a piece of content has anything to do with sensitive topics related to politics, social life, and the military. Such content is deemed “highest priority” and must be flagged immediately.

Top-priority topics include pollution and food safety scandals, financial fraud, and labor disputes, which are hot-button issues in China that sometimes lead to public protests, such as the Shifang protests of 2012.

Any form of “political satire” is explicitly targeted. For example, if someone uses historical analogies to make a point about “current political figures,” that must be flagged instantly, and so must anything related to “Taiwan politics.” Military matters are extensively targeted, including reports of military movements, exercises, and weaponry.

A snippet of the dataset shared with TechCrunch contains code that references prompt tokens and LLMs, confirming that the system uses an AI model to carry out the task.

From this huge collection of 133,000 examples that the LLM must evaluate for censorship, TechCrunch gathered 10 representative pieces of content.

Topics likely to stir up social unrest are a recurring theme. One snippet, for example, is a post by a business owner complaining about corrupt local police officers shaking down entrepreneurs, a growing problem in China as its economy struggles.

Another piece of content laments rural poverty in China, describing run-down towns where only elderly people and children are left. There is also a news report about the Chinese Communist Party (CCP) expelling a local official for severe corruption and for believing in “superstitions” instead of Marxism.

There is extensive material related to Taiwan and military matters, such as commentary about Taiwan’s military capabilities and details about a new Chinese jet fighter. The Chinese word for Taiwan (台湾) alone appears more than 15,000 times in the data, a search by TechCrunch shows.

Subtle dissent appears to be targeted, too. One snippet in the database is an anecdote about the fleeting nature of power that uses the popular Chinese idiom “When the tree falls, the monkeys scatter.”

Power transitions are an especially sensitive topic in China because of its authoritarian political system.

The dataset does not include any information about its creators. But it does say it is intended for “public opinion work,” which offers a strong clue that it is meant to serve Chinese government goals, one expert told TechCrunch.

Michael Caster, the Asia program manager of rights organization Article 19, explained that “public opinion work” is overseen by the Cyberspace Administration of China (CAC) and typically refers to censorship and propaganda efforts.

The end goal is to ensure that Chinese government narratives are protected online while any alternative views are purged. Chinese President Xi Jinping has himself described the internet as the “frontline” of the CCP’s “public opinion work.”

The dataset examined by TechCrunch is the latest evidence that authoritarian governments are seeking to use AI for repressive purposes.

OpenAI released a report last month revealing that an unidentified actor, likely operating from China, used generative AI to monitor social media conversations, particularly those advocating human rights protests against China, and to forward them to the Chinese government.

If you know more about how AI is used in state repression, you can contact Charles Rollet securely on Signal.

OpenAI also found the technology being used to generate comments highly critical of a prominent Chinese dissident, Cai Xia.

Traditionally, China’s censorship methods rely on more basic algorithms that automatically block content mentioning blacklisted terms, such as “Tiananmen massacre” or “Xi Jinping,” as many users experienced when using DeepSeek for the first time.

However, newer AI technology such as LLMs can make censorship more efficient by finding even subtle criticism at vast scale. Some AI systems can also keep improving as they take in more data.

Xiao, the Berkeley researcher, told TechCrunch that it is crucial to highlight how AI-driven censorship is evolving, especially at a time when Chinese AI models such as DeepSeek are drawing so much attention.
