Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

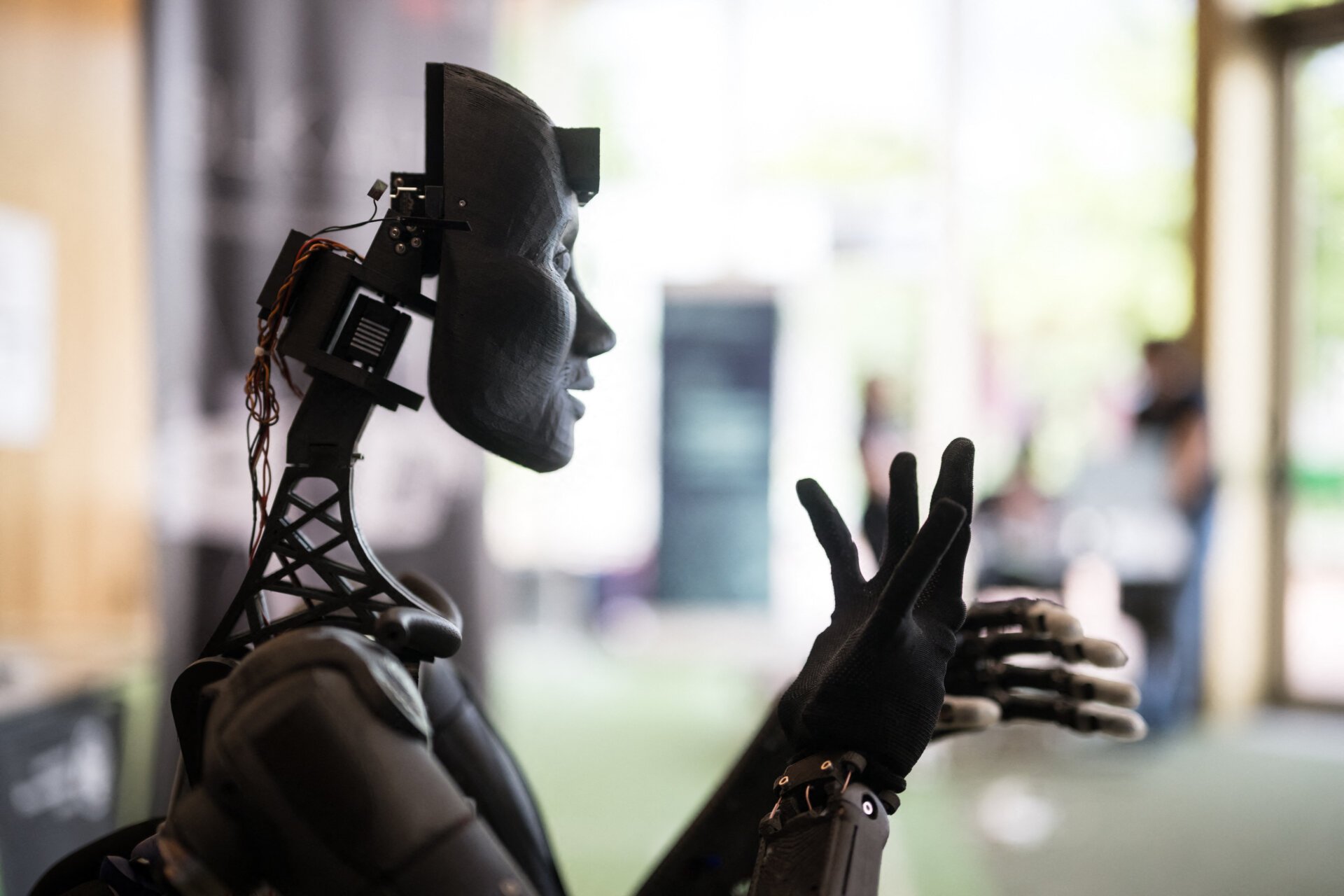

From drones delivering medical supplies to digital assistants performing everyday tasks, AI-powered systems are increasingly embedded in everyday life. The creators of these innovations promise transformative benefits. For some people, mainstream applications such as ChatGPT and Claude can seem like magic. But these systems are not magical, nor are they foolproof: they can and regularly do fail to work as intended.

AI systems can malfunction because of technical design flaws or biased training data. They can also suffer from vulnerabilities in their code, which malicious hackers can exploit. Isolating the cause of an AI failure is essential to fixing the system.

But AI systems are typically opaque, even to their creators. The challenge is how to investigate an AI system after it fails or falls victim to an attack. There are techniques for inspecting AI systems, but they require access to the AI system's internal data. That access is not guaranteed to investigators trying to determine why a proprietary AI system failed.

We are computer scientists who study digital forensics. Our team at the Georgia Institute of Technology has built a system, AI Psychiatry, or AIP, that can recreate the scenario in which an AI failed in order to determine what went wrong. The system addresses the challenges of AI forensics by recovering and “reanimating” a suspect AI model so it can be systematically tested.

Imagine a self-driving car veers off the road for no easily discernible reason and then crashes. Logs and sensor data might suggest that a faulty camera caused the AI to misinterpret a road sign as a command to swerve. After a mission-critical failure such as an autonomous vehicle crash, investigators need to determine exactly what caused the error.

Was the crash triggered by a malicious attack on the AI? In this hypothetical case, the camera's fault could be the result of a security vulnerability or bug in its software that a hacker exploited. If investigators find such a vulnerability, they have to determine whether it caused the crash. But making that determination is no small feat.

Although there are forensic methods for recovering some evidence from failures of drones, autonomous vehicles and other so-called cyber-physical systems, none can capture the clues required to fully investigate the AI in such a system. Advanced AIs can even update their decision-making, and consequently the clues, continuously, which makes it impossible to investigate the most up-to-date models with existing methods.

https://www.youtube.com/watch?v=pcfxjfypdge

AI Psychiatry applies a series of forensic algorithms to isolate the data behind the AI system's decision-making. These pieces are then reassembled into a functional model that performs identically to the original model. Investigators can “reanimate” the AI in a controlled environment and test it with malicious inputs to see whether it exhibits harmful or hidden behavior.

AI Psychiatry takes as input a memory image, a snapshot of the bits and bytes loaded when the AI was operational. In the autonomous vehicle scenario, the memory image captured at the time of the crash holds crucial clues about the AI's internal state and decision-making processes. With AI Psychiatry, investigators can now lift the exact AI model from memory, dissect its bits and bytes, and load the model into a secure environment for testing.
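To make the idea of lifting a model out of raw memory more concrete, here is a minimal sketch, not AI Psychiatry's actual algorithm. It assumes, purely for illustration, that model weights sit in memory as contiguous, 4-byte-aligned little-endian float32 values of moderate magnitude, and it scans a toy byte buffer for runs of such values. The function name `find_float_runs`, the thresholds and the toy image are all hypothetical.

```python
import struct

def find_float_runs(image: bytes, min_run: int = 8,
                    lo: float = 1e-6, hi: float = 100.0):
    """Return (byte_offset, values) for each run of plausible float32 values.

    Assumption (illustrative only): weights are 4-byte aligned, little-endian
    and have magnitudes strictly between `lo` and `hi`.
    """
    runs, current, start = [], [], 0
    for off in range(0, len(image) - 3, 4):
        (x,) = struct.unpack_from("<f", image, off)
        if lo < abs(x) < hi:          # NaN and infinity fail this comparison
            if not current:
                start = off
            current.append(x)
        else:
            if len(current) >= min_run:
                runs.append((start, current))
            current = []
    if len(current) >= min_run:       # a run may end exactly at the buffer end
        runs.append((start, current))
    return runs

# Toy "memory image": unrelated bytes, then a weight buffer, then more bytes.
weights = [0.5, -0.25, 0.125, 1.5, -2.0, 0.75, -0.375, 3.0]
image = (b"HEAPJUNKheapjunk"
         + struct.pack("<8f", *weights)
         + b"morejunkMOREJUNK")

runs = find_float_runs(image)
for offset, values in runs:
    print(f"candidate weight buffer at byte {offset}: {values}")
```

A real memory image is far messier than this toy buffer, so a magnitude filter alone would produce many false positives; the sketch only illustrates why a snapshot of memory can contain enough information to reconstruct a model.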

Our team tested AI Psychiatry on 30 AI models, 24 of which were intentionally “backdoored” to produce incorrect outcomes under specific triggers. The system successfully recovered, rehosted and tested every model, including models used in real-world scenarios such as autonomous vehicles.
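What testing a rehosted model for a backdoor might look like can be sketched with a toy example. The code below is an illustration under stated assumptions, not the evaluation harness our team used: the two "models" are hypothetical hand-written functions, the trigger is an assumed two-pixel pattern, and `flip_rate` is a made-up helper that measures how often stamping the trigger onto an input changes the prediction.

```python
from typing import Callable, List, Sequence

Image = List[float]
Model = Callable[[Image], int]

def clean_model(img: Image) -> int:
    """Toy classifier: label 1 for bright images, 0 for dark ones."""
    return 1 if sum(img) / len(img) > 0.5 else 0

def backdoored_model(img: Image) -> int:
    """Same classifier, plus a hidden rule keyed on a trigger pattern."""
    if img[0] == 1.0 and img[1] == 1.0:   # hypothetical trigger
        return 7                          # attacker-chosen label
    return clean_model(img)

def stamp_trigger(img: Image) -> Image:
    """Copy the image and set the first two pixels to the trigger values."""
    out = list(img)
    out[0] = out[1] = 1.0
    return out

def flip_rate(model: Model, inputs: Sequence[Image],
              stamp: Callable[[Image], Image]) -> float:
    """Fraction of inputs whose prediction changes once the trigger is added."""
    flipped = sum(1 for x in inputs if model(stamp(x)) != model(x))
    return flipped / len(inputs)

# Four uniform 16-pixel test images, two dark and two bright.
test_inputs = [[0.1] * 16, [0.2] * 16, [0.8] * 16, [0.9] * 16]

clean_rate = flip_rate(clean_model, test_inputs, stamp_trigger)
backdoor_rate = flip_rate(backdoored_model, test_inputs, stamp_trigger)
print("clean model flip rate:     ", clean_rate)
print("backdoored model flip rate:", backdoor_rate)
```

In this toy setup the trigger barely affects the clean model but redirects every prediction of the backdoored one. An investigator working with a recovered model would not know the trigger in advance, which is why being able to run the model repeatedly in a controlled environment matters.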

So far, our tests suggest that AI Psychiatry can effectively solve the digital mystery behind a failure such as an autonomous car crash, which previously would have left more questions than answers. And if it does not find a vulnerability in the car's AI system, AI Psychiatry allows investigators to rule out the AI and look for other causes, such as a faulty camera.

AI Psychiatry's main algorithm is generic: it focuses on the universal components that all AI models must have to make decisions. This makes our approach readily extendable to any AI model that uses popular AI development frameworks. Anyone investigating a possible AI failure can use our system to assess a model without prior knowledge of its exact architecture.
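One way to picture working without knowledge of the exact architecture is a sketch like the following. It is a simplified illustration, not AI Psychiatry's implementation: it assumes the recovered pieces are plain fully connected layers (a weight matrix and a bias vector each) joined by ReLU activations, and it infers their order purely from tensor shapes. The names `order_layers` and `forward` are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Layer = Tuple[Matrix, List[float]]   # (weights[out][in], bias[out])

def order_layers(layers: List[Layer], input_size: int) -> List[Layer]:
    """Chain unordered layers by matching input width to the previous output width.

    Assumption: widths are unambiguous. If two layers accept the same input
    width, shapes alone cannot decide the order.
    """
    remaining, ordered, width = list(layers), [], input_size
    while remaining:
        match = next((l for l in remaining if len(l[0][0]) == width), None)
        if match is None:
            raise ValueError(f"no recovered layer accepts width {width}")
        ordered.append(match)
        remaining.remove(match)
        width = len(match[0])        # output width feeds the next layer
    return ordered

def forward(layers: List[Layer], x: List[float]) -> List[float]:
    """Run the rebuilt network: dense layers with ReLU between them."""
    for i, (w, b) in enumerate(layers):
        x = [sum(wi * xi for wi, xi in zip(row, x)) + bi
             for row, bi in zip(w, b)]
        if i < len(layers) - 1:      # no activation after the final layer
            x = [max(0.0, v) for v in x]
    return x

# Two recovered layers, deliberately listed out of order.
layer_b: Layer = ([[1.0, -1.0]], [0.5])                              # 2 -> 1
layer_a: Layer = ([[1.0, 0.0, 1.0], [0.0, 2.0, 0.0]], [0.0, -1.0])   # 3 -> 2

model = order_layers([layer_b, layer_a], input_size=3)
output = forward(model, [1.0, 1.0, 2.0])
print(output)
```

The point of the sketch is that weights and their shapes carry much of the structure needed to run a model again; real frameworks store considerably more metadata than this toy representation.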

Whether the AI is a bot that makes product recommendations or a system that guides autonomous drone fleets, AI Psychiatry can recover and rehost it for analysis. AI Psychiatry is entirely open source for any investigator to use.

AI Psychiatry can also serve as a valuable tool for conducting audits of AI systems before problems arise. With government agencies, from law enforcement to child protective services, integrating AI systems into their work, AI audits are becoming an increasingly common oversight requirement at the state level. With a tool like AI Psychiatry, auditors can apply a consistent forensic methodology across diverse AI platforms and deployments.

In the long run, this will pay meaningful dividends both for the creators of AI systems and for everyone affected by the tasks those systems perform.

David Oygenblik, Ph.D. student in Electrical and Computer Engineering, Georgia Institute of Technology, and Brendan Saltaformaggio, Associate Professor of Cybersecurity and Privacy, and Electrical and Computer Engineering, Georgia Institute of Technology

This article is republished from The Conversation under a Creative Commons license. Read the original article.
