Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

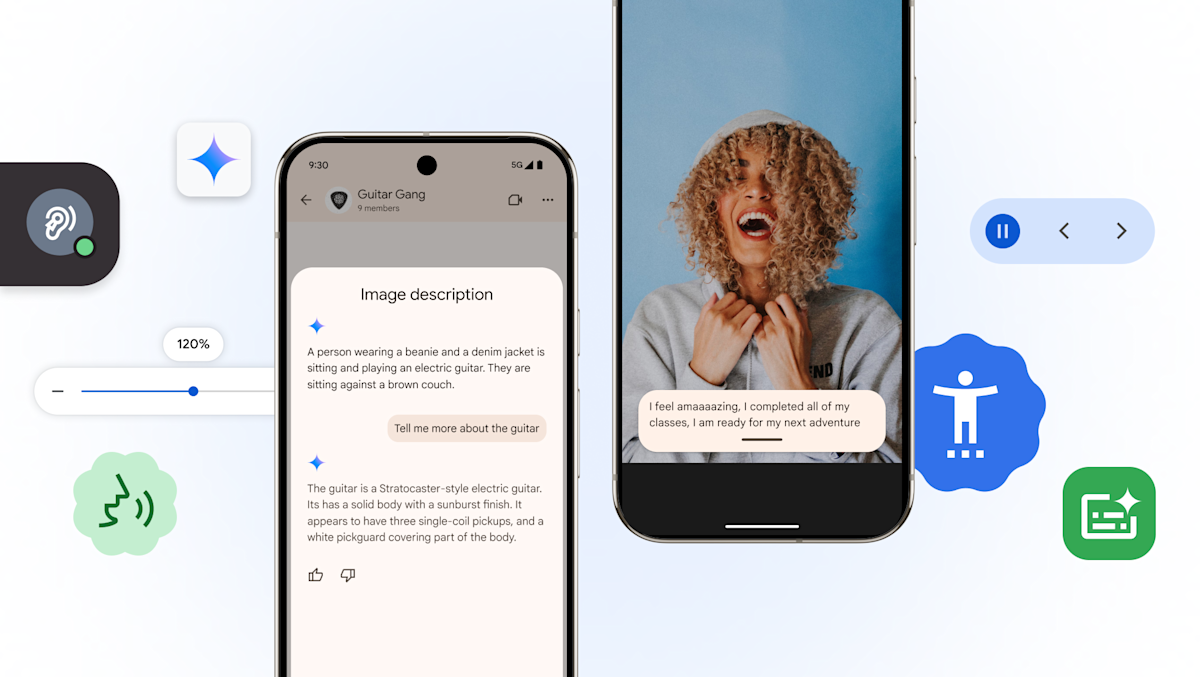

Today is Global Accessibility Awareness Day (GAAD), and, as in previous years, many tech companies are marking the occasion by announcing new accessibility features for their ecosystems. Apple got things rolling on Tuesday, and now Google is joining the parade. To start, the company has made TalkBack, Android's built-in screen reader, more useful. With the help of Google's Gemini models, TalkBack can now answer questions about images displayed on your phone, even if they don't have alt text describing them.

“That means the next time you get a photo of a friend's new guitar, you can get a description and ask follow-up questions about its make and color, or even what else is in the image,” Google said. Gemini can see and understand the image thanks to the multimodal capabilities Google built into the model. In addition, the Q&A functionality works across the entire screen. So, for example, if you're shopping online, you can first ask your phone to describe the color of a piece of clothing you're interested in and then ask whether it's on sale.

Google is also rolling out a new version of Expressive Captions. First announced at the end of last year, the feature generates subtitles that try to capture the emotion of the speakers. For example, if you're talking with some friends and one of them groans at your lame joke, your phone will not only subtitle what they say but also include “[groaning]” in the transcription. With the new version of Expressive Captions, the subtitles will also reflect when someone drags out the sound of their words, so the next time you're following a live football match, the announcer's drawn-out calls will be transcribed properly.

The new version of Expressive Captions is available on devices running Android 15 or later in the US, the UK, Canada and Australia.