Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

In the first generation of the web, back in the late 1990s, search was okay but not great, and it wasn’t easy to find things. That led to the rise of syndication protocols in the early 2000s, with Atom and RSS (Really Simple Syndication) giving website owners a simplified way to make headlines and other content easily available and searchable.

In the modern era of AI, a new group of protocols is emerging to serve the same basic purpose. This time, instead of making sites easier for people to find, the goal is to make websites easier for AI to use. Anthropic’s Model Context Protocol (MCP), Google’s Agent2Agent and llms.txt for large language models (LLMs) are among the existing efforts.

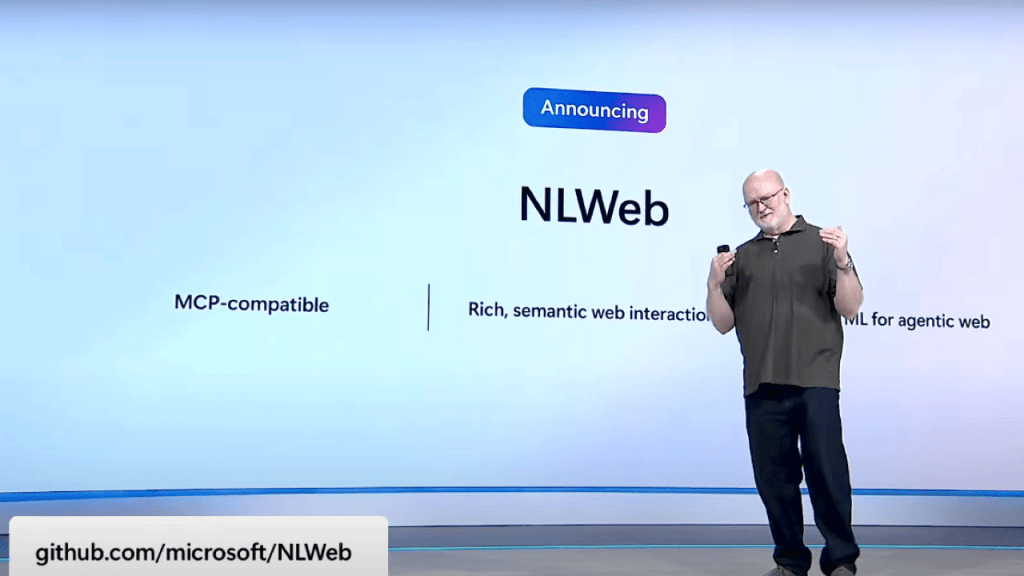

The newest protocol is Microsoft’s open-source NLWeb (natural language web) effort, announced at the company’s Build 2025 conference. NLWeb is also directly linked to the first generation of web syndication standards: its creator helped create RSS, RDF (Resource Description Framework) and schema.org.

NLWeb lets websites easily add AI-powered conversational interfaces, effectively turning any website into an AI app where users can query the content in natural language. NLWeb isn’t necessarily about competing with other protocols; rather, it builds on top of them. The new protocol uses existing structured data formats such as RSS, and each NLWeb instance functions as an MCP server.

“The idea behind NLWeb is it is a way for anyone who has a website or an API already to very easily make their website or their API an agentic application,” Microsoft CTO Kevin Scott said during his Build 2025 keynote. “You really can think about it a little bit like HTML for the agentic web.”

NLWeb turns websites into AI-enabled experiences through a straightforward process that builds on existing web infrastructure while using modern AI technologies.

Building on existing data: The system starts from structured data that websites already publish, such as schema.org markup, RSS feeds and other semi-structured formats commonly embedded in web pages. This means publishers do not need to completely rebuild their content infrastructure.

Data processing and storage: NLWeb includes tools for adding this structured data to vector databases, which enable efficient semantic search and retrieval. The system supports all major vector database options, so developers can choose the solution that best fits their technical requirements and scale.

AI enhancement layer: LLMs then enrich the stored data with external knowledge and context. For example, when a user asks about restaurants, the system automatically layers on geographic insights, reviews and related information, combining the vectorized content with LLM capabilities to provide comprehensive, intelligent answers rather than simple data retrieval.

Universal interface creation: The result is a natural language interface that serves both human users and AI agents. Visitors can ask questions in plain English and receive conversational answers, while AI systems can programmatically access and query the site’s information through the MCP framework.
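The four steps above can be sketched in a few lines of Python. This is a toy illustration only, not NLWeb’s actual code: the RSS feed is invented, a bag-of-words count vector stands in for a learned embedding model, a plain list stands in for a vector database, and the final LLM step is reduced to returning the best-matching item.

```python
import math
import re
import xml.etree.ElementTree as ET
from collections import Counter

# Hypothetical RSS feed standing in for data a site already publishes.
RSS_FEED = """<rss version="2.0">
  <channel>
    <title>Example Food Blog</title>
    <item>
      <title>Best ramen restaurants downtown</title>
      <description>A guide to noodle restaurants with reviews and opening hours.</description>
      <link>https://example.com/ramen</link>
    </item>
    <item>
      <title>How to bake sourdough bread</title>
      <description>Step by step recipe for a crusty sourdough loaf at home.</description>
      <link>https://example.com/sourdough</link>
    </item>
  </channel>
</rss>"""


def parse_items(rss_text):
    """Step 1: reuse existing structured data (here, RSS items)."""
    root = ET.fromstring(rss_text)
    return [
        {
            "title": item.findtext("title", default=""),
            "description": item.findtext("description", default=""),
            "link": item.findtext("link", default=""),
        }
        for item in root.iter("item")
    ]


def embed(text):
    """Toy embedding: word counts. A real system would use a learned model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def build_index(items):
    """Step 2: store one vector per item (a list stands in for a vector DB)."""
    return [(embed(item["title"] + " " + item["description"]), item) for item in items]


def search(index, question, top_k=1):
    """Steps 3-4: retrieve the closest items; an LLM would then phrase the answer."""
    q = embed(question)
    ranked = sorted(index, key=lambda pair: cosine(q, pair[0]), reverse=True)
    return [item for _, item in ranked[:top_k]]


index = build_index(parse_items(RSS_FEED))
best = search(index, "Where can I find good restaurants with reviews?")[0]
print(best["link"])
```

In a production setup, the retrieved items would be passed to an LLM as context so that it can compose a conversational answer instead of returning a raw link.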

This approach allows any website to participate in the emerging agentic web without requiring extensive technical overhauls. It makes AI-powered search and interaction as accessible as creating a basic webpage was in the early days of the internet.

There are many different protocols emerging in the AI space, and not all of them do the same thing.

Google’s Agent2Agent (A2A), for example, is all about enabling agents to talk to each other. It is aimed at orchestrating and communicating between AI agents and is not particularly focused on AI-enabling existing websites or content. Maria Gorskikh, founder and CEO of AIA and a contributor to the Project NANDA team at MIT, explained to VentureBeat that Google’s A2A enables structured task passing between agents using defined schemas and lifecycle models.

“While the protocol is open source and designed to be model-agnostic, its current implementations and tooling are closely tied to Google’s Gemini stack, making it more of a backend orchestration framework than a general-purpose interface for web-based services,” Gorskikh said.

Another emerging effort is llms.txt. Its goal is to help LLMs better access web content. While on the surface it might sound a bit like NLWeb, it is not the same thing.

“NLWeb doesn’t compete with llms.txt; it is more comparable to web scraping tools that try to deduce intent from a website,” Michael Ni, VP and principal analyst at Constellation Research, told VentureBeat.

Krish Arvapally, co-founder and CTO of Dappier, explained to VentureBeat that llms.txt provides a markdown-style format with training permissions that helps LLM crawlers ingest content appropriately. NLWeb, by contrast, focuses on enabling real-time interactions directly on a publisher’s website. Dappier has its own platform that automatically ingests RSS feeds and other structured data, then delivers branded, embeddable conversational interfaces. Publishers can also syndicate their content through its data marketplace.

MCP is the other major protocol. It is increasingly becoming a de facto standard and is a foundational element of NLWeb. Fundamentally, MCP is an open standard for connecting AI systems with data sources. Ni explained that in Microsoft’s view, MCP is the transport layer; together, MCP and NLWeb provide the TCP/IP and the HTML of the open agentic web.
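To make the “transport layer” idea concrete: MCP messages are JSON-RPC 2.0 requests, and a client invokes a server-side tool with the `tools/call` method. The sketch below builds such a request as an agent might send it to a site acting as an MCP server. The tool name `ask` and the `query` argument are assumptions chosen for illustration, not details taken from this article.

```python
import json


def make_tool_call(request_id, tool_name, arguments):
    """Build an MCP-style JSON-RPC 2.0 request that invokes a named tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# "ask" and "query" are hypothetical names used only for this example.
request = make_tool_call(1, "ask", {"query": "vegetarian dinner recipes"})
print(json.dumps(request, indent=2))
```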

Forrester senior analyst Will McKeon-White sees a number of advantages for NLWeb over other options.

“The main advantage of NLWeb is better control over how AI systems ‘see’ the pieces that make up websites, allowing for better navigation and a more complete understanding of the tooling,” McKeon-White told VentureBeat. “This could reduce both errors from systems misunderstanding what they’re seeing on websites, as well as reduce interface rework.”

Microsoft didn’t just throw NLWeb over the proverbial wall and hope someone would use it.

Microsoft already has multiple organizations engaged with and using NLWeb, including Chicago Public Media, Allrecipes, Eventbrite, Hearst (Delish), O’Reilly Media, Tripadvisor and Shopify.

Andrew Odewahn, chief technology officer at O’Reilly Media, is among the early adopters and sees real promise in NLWeb.

“NLWeb leverages the best practices and standards developed over the past decade on the open web and makes them available to LLMs,” Odewahn told VentureBeat. “Companies have long spent time optimizing this kind of metadata for SEO and other marketing purposes, but now they can take advantage of this wealth of data to make their own internal AI smarter and more capable with NLWeb.”

In his view, NLWeb is valuable to enterprises both as consumers of public information and as publishers of private information. He noted that nearly every company has sales and marketing efforts where someone might need to ask, “What does this company do?” or “What is this product about?”

“NLWeb provides a great way to open this information to your internal LLMs so that you don’t have to go hunting and pecking to find it,” said Odewahn. “As a publisher, you can add your own metadata using the schema.org standard and use NLWeb internally as an MCP server to make it available for internal use.”
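As a rough illustration of the kind of schema.org metadata Odewahn describes, the following sketch builds a JSON-LD description of an article and wraps it in the script tag commonly used to embed it in a page. The headline, author, date and description are invented placeholders, and the choice of the `Article` type is an assumption made for the example.

```python
import json


def article_jsonld(headline, author, date_published, description):
    """Return a schema.org Article description as a JSON-LD dictionary."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
        "description": description,
    }


# All values below are hypothetical placeholders.
metadata = article_jsonld(
    headline="Getting started with sourdough",
    author="Jane Example",
    date_published="2025-01-15",
    description="A beginner's guide to baking sourdough bread at home.",
)

# JSON-LD is typically embedded in a page inside a script tag of this type.
snippet = (
    '<script type="application/ld+json">\n'
    + json.dumps(metadata, indent=2)
    + "\n</script>"
)
print(snippet)
```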

Using NLWeb isn’t necessarily a heavy lift, either. Odewahn noted that many organizations are probably already using many of the standards NLWeb relies on.

“There’s no downside in trying it out now, since NLWeb can run entirely within your infrastructure,” he said. “It’s open source software meeting the best in open source data, so you have nothing to lose and a lot to gain by trying it now.”

Constellation Research analyst Michael Ni has a somewhat positive view of NLWeb. However, that doesn’t mean enterprises need to adopt it immediately.

Ni noted that NLWeb is at a very early stage of maturity and that enterprises should expect to wait two to three years for any substantial adoption. He suggested that leading-edge companies with specific needs, such as active marketplaces, can look to pilot it now, with the ability to engage with and help shape the standard.

“It’s a visionary specification, but it needs ecosystem validation, implementation tooling and reference integrations before it can reach mainstream enterprise pilots,” Ni said.

Others take a somewhat more aggressive view on adoption. Gorskikh suggests taking an accelerated approach to ensure your enterprise doesn’t fall behind.

“If you’re an enterprise with a large content surface, internal knowledge base or structured data, piloting NLWeb now is a smart and necessary step to stay ahead,” she said. “This isn’t a wait-and-see moment; it’s more like the early adoption of APIs or mobile apps.”

That said, she noted that regulated industries need to tread carefully. Sectors such as insurance, banking and healthcare should hold off on production use until there is a neutral, decentralized verification and discovery system in place. Early-stage efforts are already under way; for example, the NANDA project at MIT, in which Gorskikh participates, is building an open, decentralized registry and reputation system for agentic services.

For enterprise AI leaders, NLWeb is a watershed moment and a technology that should not be ignored.

AI is going to interact with your site, and you need to AI-enable it. NLWeb will be particularly attractive to publishers, much as RSS became a must-have for all websites in the early 2000s. In a few years, users will simply expect it to be there, and agentic AI systems will likewise look for it and expect to be able to access the content.

This is the promise of NLWeb.