NVIDIA is rolling out its AI chips to data centers and what it calls AI factories around the world, and the company announced today that its Blackwell chips lead the AI benchmarks.

NVIDIA and its partners are accelerating the training and deployment of next-generation AI applications that use the most recent advances.

The NVIDIA Blackwell architecture is built to meet the heightened performance requirements of these new applications. In the latest round of MLPerf Training, the 12th since the benchmark was introduced in 2018, the NVIDIA AI platform delivered the highest performance on every benchmark, including the suite's toughest large language model (LLM) test: Llama 3.1 405B pre-training.

The NVIDIA platform was the only one to submit results on every MLPerf Training benchmark, covering a wide range of AI workloads: recommendation systems, multimodal LLMs, object detection and graph neural networks.

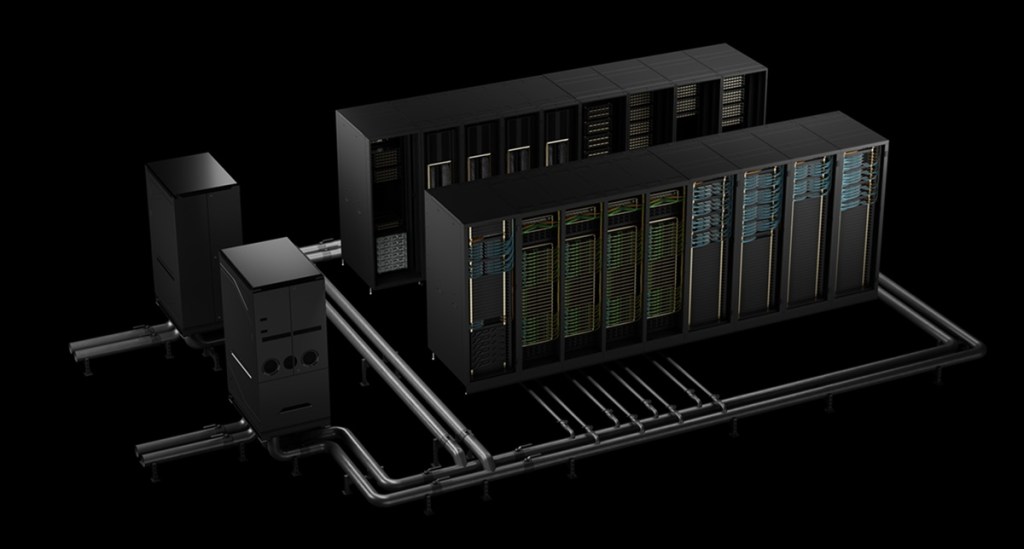

NVIDIA used two AI supercomputers powered by the Blackwell platform: Tyche, built from NVIDIA GB200 NVL72 rack-scale systems, and Nyx, based on NVIDIA DGX B200 systems. In addition, NVIDIA collaborated with CoreWeave and IBM to submit GB200 NVL72 results using a total of 2,496 Blackwell GPUs and 1,248 NVIDIA Grace CPUs.

On the new Llama 3.1 405B benchmark, Blackwell delivered 2.2 times the performance of the previous-generation architecture at the same scale.

On the Llama 2 70B LoRA fine-tuning benchmark, NVIDIA DGX B200 systems with eight Blackwell GPUs delivered 2.5 times the performance of a submission using the same number of GPUs in the previous round.

These performance leaps reflect advances in the Blackwell architecture, including high-density liquid-cooled racks, 13.4TB of coherent memory, fifth-generation NVIDIA NVLink and NVIDIA Quantum-2 InfiniBand networking. In addition, innovations in the NVIDIA NeMo Framework software stack raise the bar for next-generation multimodal LLM training, which is critical to bringing agentic AI applications to market.

These agentic AI-powered applications will one day run in AI factories, the engines of the agentic AI economy. These new applications will produce tokens and valuable intelligence that can be applied to almost every industry and academic domain.

The NVIDIA data center platform includes GPUs, CPUs, high-speed fabrics and networking, as well as software such as NVIDIA CUDA-X libraries, the NeMo Framework, NVIDIA TensorRT-LLM and NVIDIA Dynamo. This highly tuned ensemble of hardware and software technologies lets organizations train and deploy models more quickly, significantly accelerating time to value.

The NVIDIA partner ecosystem participated extensively in this MLPerf round. In addition to the submission with CoreWeave and IBM, other compelling submissions came from ASUS, Cisco, Giga Computing, Lambda, Lenovo, Quanta Cloud Technology and Supermicro.

This round included the first MLPerf Training submissions using GB200. The benchmark is developed by the MLCommons Association, which has more than 125 members and affiliates. Its time-to-train metric ensures that the training process produces a model that meets the required accuracy, and its standardized benchmark run rules ensure apples-to-apples performance comparisons. Results are peer-reviewed before publication.

Dave Salvator is someone I knew when he was part of the tech press. Now he is director of accelerated computing products in the Accelerated Computing Group at NVIDIA. In a press briefing, Salvator noted that NVIDIA CEO Jensen Huang talks about the notion of scaling laws for AI. These include pre-training, where you teach the AI model knowledge. That starts from scratch. It is a heavy computational lift that forms the backbone of AI, Salvator said.

From there, NVIDIA moves into post-training scaling. This is where models go to school, so to speak. It is where fine-tuning happens: you bring in a different data set to teach a pre-trained model additional domain knowledge from your own custom data.

And finally, there is test-time scaling, or reasoning, sometimes called long thinking. The other term for it is agentic AI. It is AI that can actually think, reason and solve problems, rather than one where you ask a question and get a relatively simple answer. Test-time scaling and reasoning can work on more complex tasks and deliver rich analysis.

And then there is also generative AI, which can create content that includes text summarization and translation, but also visual content and even audio content. There are many types of scaling going on in the AI world. For the benchmarks, NVIDIA focused on pre-training and post-training results.

Pre-training is where AI begins, Salvator said; after that, the models are deployed.

The MLPerf benchmark is in its 12th round and dates back to 2018. The consortium behind it has more than 125 members, and it has been used for both inference and training tests. The industry sees the benchmarks as robust.

“As I'm sure many of you are aware, sometimes performance claims in the AI world can be a bit of a Wild West. MLPerf tries to bring some order to that chaos,” Salvator said. “Everyone has to do the same work. Everyone is held to the same standard in terms of convergence. And once results are submitted, those results are reviewed and can be vetted by all the other submitters.”

The most intuitive metric for training is how long it takes to train an AI model to what is called convergence, which means reaching a specified level of accuracy. It is an apples-to-apples comparison, Salvator said, and it takes constantly changing workloads into account.
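To make the time-to-train idea concrete, here is a minimal Python sketch, not MLPerf's actual harness. It times a training loop until an evaluation score first reaches a target, and compares two such times as a speedup ratio. The names `time_to_train`, `speedup` and `ToyModel` are hypothetical and exist only for illustration, and the toy model simply gains a fixed amount of accuracy per epoch.

```python
import time
from typing import Callable, Optional


def time_to_train(
    train_one_epoch: Callable[[], None],
    evaluate: Callable[[], float],
    target_accuracy: float,
    max_epochs: int = 100,
    clock: Callable[[], float] = time.perf_counter,
) -> Optional[float]:
    """Return seconds elapsed until evaluate() first reaches target_accuracy.

    Returns None if the target is not reached within max_epochs,
    i.e. the run did not converge.
    """
    start = clock()
    for _ in range(max_epochs):
        train_one_epoch()
        if evaluate() >= target_accuracy:
            return clock() - start
    return None


def speedup(baseline_seconds: float, new_seconds: float) -> float:
    """How many times faster the new run converged than the baseline."""
    return baseline_seconds / new_seconds


class ToyModel:
    """Hypothetical stand-in for a real model: +0.1 accuracy per epoch."""

    def __init__(self) -> None:
        self.epochs = 0

    def train_one_epoch(self) -> None:
        self.epochs += 1

    def evaluate(self) -> float:
        return min(1.0, self.epochs / 10)


if __name__ == "__main__":
    model = ToyModel()
    elapsed = time_to_train(model.train_one_epoch, model.evaluate, 0.8)
    print(f"converged after {model.epochs} epochs in {elapsed:.6f} s")
```

Because every run must reach the same target before the clock stops, a faster time cannot be bought by stopping early at lower accuracy, which is what makes the comparison apples-to-apples.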

This year, there is a new Llama 3.1 405B workload, which replaces the GPT-3 175B workload that was previously in the benchmark. Salvator noted that NVIDIA set a number of records in the benchmarks. The NVIDIA GB200 NVL72 AI factories are fresh from the fabrication factories. From one generation of chips (Hopper) to the next (Blackwell), NVIDIA saw a 2.5 times improvement in image generation results.

“We are still quite early in the life of Blackwell, so we expect to get more performance over time from Blackwell as we continue to refine our software optimizations and as new workloads come to market,” Salvator said.

He noted that NVIDIA was the only company to submit entries for all the benchmarks.

“The great performance we're achieving comes from a combination of things. That, along with other general architectural goodness in Blackwell, delivered up to 2.66 times more performance, and our ongoing work is what made that performance possible,” he said.

He added: “Because of NVIDIA's heritage, we've been known for the longest time as the GPU guys. Now we build what we refer to as AI factories. It has really been an interesting journey.”
