Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

French AI darling Mistral is keeping the new releases coming this summer.

Just days after announcing its own domestic AI-optimized cloud service, Mistral Compute, the well-funded company has released a small update to its 24B-parameter open source model Mistral Small, jumping from the 3.1 release to 3.2-24B Instruct-2506.

The new version builds directly on Mistral Small 3.1 and aims to improve specific behaviors such as instruction following, output stability, and function calling robustness. While the overall architectural details remain unchanged, the update introduces targeted refinements that show up in both internal evaluations and public benchmarks.

According to Mistral AI, Small 3.2 is better at following precise instructions and reduces the likelihood of infinite or repetitive generations, a problem occasionally seen in prior versions when handling long or ambiguous prompts.

Similarly, the function calling template has been upgraded to support more reliable tool use, particularly in frameworks such as vLLM.

At the same time, it can run on a setup with a single NVIDIA A100/H100 80GB GPU, which drastically opens up the options for businesses with tight compute resources and/or budgets.

Mistral Small 3.1 was announced in March 2025 as a flagship open release in the 24B-parameter range. It offered full multimodal capabilities, multilingual understanding, and long-context processing of up to 128K tokens.

The model was positioned against peers such as GPT-4o Mini, Claude 3.5 Haiku, and Gemma 3 and, according to Mistral, outperformed them on many tasks.

Small 3.1 also emphasized efficient deployment, with claims of inference at 150 tokens per second and support for on-device use with 32 GB of RAM.

That release came with both base and instruct checkpoints, offering flexibility for fine-tuning across domains such as legal, medical, and technical fields.

In contrast, Small 3.2 focuses on surgical improvements to behavior and reliability. It does not aim to introduce new capabilities or architectural changes. Instead, it acts as a maintenance release: cleaning up edge cases in output generation, tightening instruction compliance, and refining system prompt interactions.

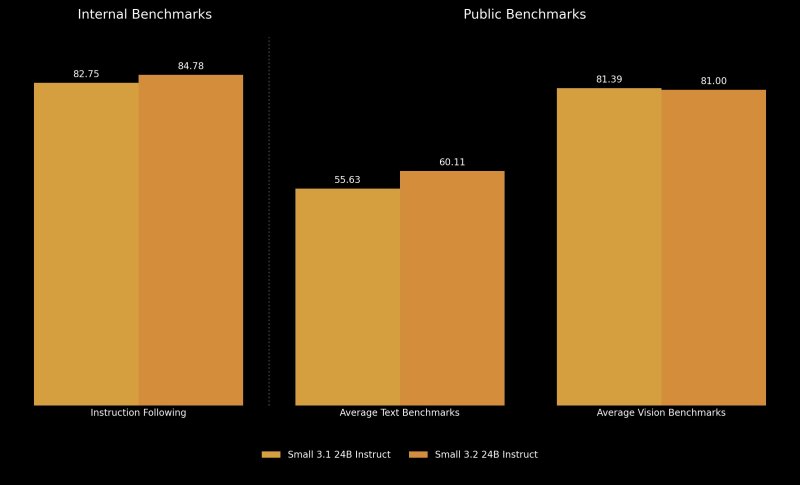

Instruction-following benchmarks show a small but measurable improvement. Mistral's internal accuracy rose from 82.75% in Small 3.1 to 84.78% in Small 3.2.

Similarly, performance on external datasets such as WildBench v2 and Arena Hard v2 improved significantly: WildBench increased by nearly 10 percentage points, while Arena Hard more than doubled, jumping from 19.56% to 43.10%.

Internal metrics also suggest reduced output repetition. The rate of infinite generations dropped from 2.11% in Small 3.1 to 1.29% in Small 3.2. This makes the model more reliable for developers building applications that require consistent, bounded responses.

Performance across text and coding benchmarks presents a more nuanced picture. Small 3.2 showed gains on HumanEval Plus (88.99% to 92.90%), MBPP Pass@5 (74.63% to 78.33%), and SimpleQA. It also modestly improved MMLU Pro and MATH results.

Vision benchmarks remain mostly consistent, with slight fluctuations. ChartQA and DocVQA saw marginal gains, while AI2D and MathVista fell by less than two percentage points. Average vision performance decreased slightly, from 81.39% in Small 3.1 to 81.00% in Small 3.2.

This aligns with Mistral's stated intent: Small 3.2 is not a model overhaul but a refinement. As such, most benchmarks are within expected variance, and some regressions appear to be trade-offs for targeted improvements elsewhere.

However, AI power user and influencer @chatgpt21 pointed out on X that the model got worse on MMLU, the Massive Multitask Language Understanding benchmark, a multidisciplinary test covering 57 subjects that is designed to assess broad LLM performance across domains. Indeed, Small 3.2 scored 80.50%, slightly below Small 3.1's 80.62%.
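The figures quoted above can be put side by side with a few lines of arithmetic. The sketch below is only an illustration: the scores are the ones cited in this article, while the helper name `compare` and the dictionary layout are assumptions made for the example.

```python
# Scores in percent as quoted in this article: (Small 3.1, Small 3.2).
SCORES = {
    "Instruction following (internal)": (82.75, 84.78),
    "Arena Hard v2": (19.56, 43.10),
    "HumanEval Plus": (88.99, 92.90),
    "MBPP Pass@5": (74.63, 78.33),
    "MMLU": (80.62, 80.50),
}


def compare(old: float, new: float) -> tuple[float, float]:
    """Return (change in percentage points, new/old ratio), rounded to 2 places."""
    return round(new - old, 2), round(new / old, 2)


for name, (old, new) in SCORES.items():
    delta, ratio = compare(old, new)
    print(f"{name:<34} {old:6.2f} -> {new:6.2f}  {delta:+6.2f} pts  x{ratio:.2f}")
```

Reporting both the point change and the ratio helps separate a large relative jump on a low baseline, as with Arena Hard, from a small shift on an already high score, as with MMLU.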

Both Small 3.1 and 3.2 are available under the Apache 2.0 license and can be accessed via the popular AI code sharing repository Hugging Face (itself a startup based in France and NYC).

Small 3.2 is supported by frameworks such as vLLM and Transformers and requires roughly 55 GB of GPU RAM to run in bf16 or fp16 precision.
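The roughly 55 GB figure is consistent with a back-of-the-envelope estimate. As a sketch, assuming a nominal 24 billion parameters and 2 bytes per parameter for bf16 or fp16, the weights alone take about 48 GB, which would leave the rest of the quoted budget for activations, the KV cache, and runtime overhead. The function name and the split between weights and overhead are assumptions for illustration, not numbers published by Mistral.

```python
def weight_memory_gb(n_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for model weights in GB (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


N_PARAMS = 24e9          # nominal "24B"; the exact parameter count may differ
QUOTED_BUDGET_GB = 55.0  # GPU RAM figure quoted in the article
GPU_CAPACITY_GB = 80.0   # a single A100/H100 80GB card

weights_gb = weight_memory_gb(N_PARAMS)      # bf16/fp16 use 2 bytes per parameter
headroom_gb = QUOTED_BUDGET_GB - weights_gb  # assumed share for cache and overhead
fits_on_one_gpu = QUOTED_BUDGET_GB <= GPU_CAPACITY_GB

print(f"weights: ~{weights_gb:.0f} GB, headroom: ~{headroom_gb:.0f} GB, "
      f"fits on one 80 GB GPU: {fits_on_one_gpu}")
```

The same function can be called with `bytes_per_param=1` to gauge what a hypothetical 8-bit quantized copy of the weights would need, though the article does not discuss quantized variants.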

For developers looking to build or serve applications, system prompts and inference examples are provided in the model repository.

While Mistral Small 3.1 is already integrated into platforms like Google Cloud Vertex AI, Small 3.2 currently appears limited to self-serve access and direct deployment.

Mistral Small 3.2 may not shift competitive positioning in the open model space, but it reflects Mistral AI's commitment to iterative model refinement.

With noticeable improvements in reliability and task handling, particularly around instruction precision and tool usage, Small 3.2 offers a cleaner user experience for developers and enterprises building on the Mistral ecosystem.

The fact that it is made by a French startup and complies with EU rules and regulations such as the EU AI Act also makes it appealing for enterprises working in that part of the world.

Still, for those seeking the biggest jumps in benchmark performance, Small 3.1 remains a reference point, especially given that in some cases Small 3.2 does not outperform its predecessor. That makes the update more of a stability-focused option than a pure upgrade, depending on the use case.