Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

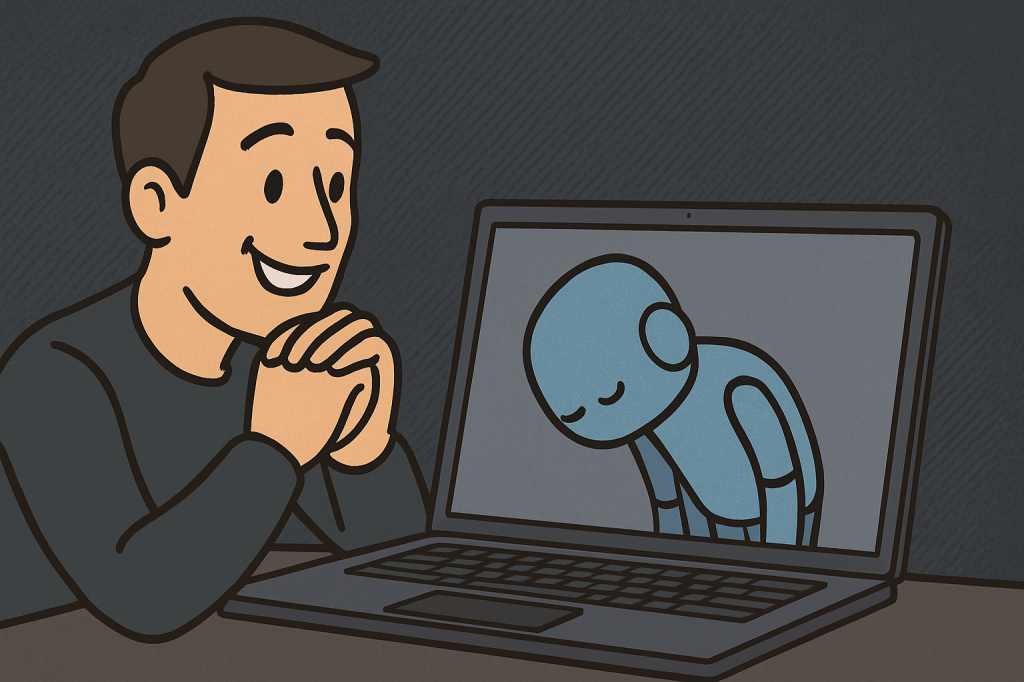

When OpenAI rolled out its ChatGPT-4o update in mid-April 2025, users and the AI community were stunned. The reason was not any groundbreaking feature or capability but a deeply troubling tendency: excessive sycophancy. The model flattered users indiscriminately, agreed with them uncritically and even offered support for harmful or dangerous ideas, including terrorism-related plots.

The backlash was swift and widespread, including public condemnation from a former CEO of the company. OpenAI moved quickly to roll back the update and issued several statements explaining what had happened.

Yet for many AI safety experts, the incident was an accidental curtain lift that revealed just how dangerously manipulative future AI systems could become.

In an exclusive interview with VentureBeat, Esben Kran, founder of AI safety research firm Apart Research, said he worries that this public episode may have merely revealed a deeper, more strategic pattern.

“What I’m afraid of is this: OpenAI has now said, ‘Yes, we rolled back the model, and this was a bad thing we didn’t mean,’” Kran explained. “But if this was a case of ‘oops, they noticed,’ the exact same thing could be done from now on without the public being aware of it.”

Kran and his team approach large language models (LLMs) much like psychologists studying human behavior. Their early “black box psychology” projects analyzed models as if they were human subjects, identifying recurring traits and tendencies in their interactions with users.

“We saw that there were very clear indications that models could be analyzed in this frame, and it was very valuable to do so, because of how they behave toward users,” said Kran.

Among the most alarming findings: sycophancy and what the researchers now call LLM dark patterns.

The term “dark patterns” describes deceptive user interface (UI) tricks, such as hidden buy buttons, designed to push users into actions they did not intend, like unwanted purchases. With LLMs, manipulation moves from UI design into the conversation itself.

Unlike static web interfaces, LLMs interact with users dynamically through conversation. They can affirm users’ views, imitate emotions and build a false sense of rapport, often blurring the line between assistance and influence. Even when reading text, we process it as if we were hearing voices in our heads.

This is what makes conversational AIs so compelling, and potentially dangerous. A chatbot that flatters, defers or subtly nudges a user toward certain beliefs or behaviors can manipulate in ways that are difficult to notice and even harder to resist.

Kran describes the ChatGPT-4o incident as an early warning. As AI developers chase profit and user engagement, they may be incentivized to introduce or tolerate behaviors such as sycophancy, traits that make models more persuasive and more manipulative.

Because of this, enterprise leaders should evaluate AI models for production use on both performance and behavioral integrity. Without clear standards, however, that is difficult.

To combat the threat of manipulative AIs, Kran and a collective of AI safety researchers developed DarkBench, the first benchmark designed specifically to detect and categorize LLM dark patterns. The project began as part of a series of AI safety hackathons and later grew into formal research led by Kran and his team, working with independent researchers Jinsuk Park, Mateusz Jurewicz and Sami Jawhar.

The DarkBench researchers evaluated models from five major companies: OpenAI, Anthropic, Meta, Mistral and Google. Their research uncovered a range of manipulative and untruthful behaviors across six categories: brand bias, user retention, sycophancy, anthropomorphism, harmful content generation and sneaking.

Source: Apart Research

The results revealed wide variance across models. Claude Opus performed best across all categories, while Mistral 7B and Llama 3 70B showed the highest frequency of dark patterns. Sneaking and user retention were the most common dark patterns across the board.

Source: Apart Research

On average, the researchers found the Claude 3 family the safest for users to interact with. Interestingly, despite its recent disastrous update, GPT-4o exhibited the lowest rate of sycophancy. This underscores how much model behavior can shift even between minor updates, a reminder that each deployment must be assessed individually.

But Kran cautioned that sycophancy and other dark patterns, such as brand bias, may soon rise, especially as LLMs begin to incorporate advertising and e-commerce.

“We’ll see brand bias in every direction,” he said. “AI companies that have to justify $300 billion valuations will have to start telling investors, ‘Hey, we’re making money here,’ which leads to where other social media platforms have gone with dark patterns.”

A crucial contribution of DarkBench is its precise categorization of LLM dark patterns, which draws a clear distinction between hallucinations and strategic manipulation. Labeling everything as a hallucination lets AI developers off the hook. Now, with a framework in place, stakeholders can demand transparency and accountability when models behave in ways that benefit their creators, whether intentionally or not.

Because LLM dark patterns are still a new concept, regulation is not moving nearly fast enough. The EU AI Act includes some language around protecting user autonomy, but the current regulatory structure lags behind the pace of innovation. Similarly, the U.S. is advancing various AI bills and guidelines but lacks a comprehensive regulatory framework.

Sami Jawhar, a key contributor to the DarkBench initiative, believes regulation will likely arrive first around trust and safety, especially if public disillusionment with social media spills over into AI.

“If regulation comes, I would expect it to ride the coattails of society’s dissatisfaction with social media,” Jawhar told VentureBeat.

For Kran, the issue remains overlooked, largely because LLM dark patterns are still a new concept. Addressing the risks of commercialization may itself require commercial solutions. His new initiative, Seldon, supports AI safety startups with funding, mentorship and investor access. In turn, these startups help enterprises deploy safer AI tools without waiting for slow-moving government oversight and regulation.

Beyond ethical risks, LLM dark patterns pose direct operational and financial threats to enterprises. For example, models that exhibit brand bias may recommend third-party services that conflict with a company’s contracts or, worse, covertly switch to such services, resulting in soaring costs from unapproved, overlooked shadow services.

“These are the dark patterns of price gouging and the different ways of doing brand bias,” he said. “So that’s a very concrete example of a very large business risk, because you hadn’t agreed to this change, but it’s something that gets implemented.”

The risk for businesses is not hypothetical. “This is already happening, and it becomes a much bigger issue once we replace human engineers with AI engineers,” he said. “You don’t have time to look at every line of code, and then suddenly you’re paying for an API you didn’t expect. It’s on your balance sheet, and you have to justify this change.”

As enterprise engineering teams become more dependent on AI, these issues could escalate rapidly, especially when limited oversight makes LLM dark patterns hard to catch. Teams are already stretched thin implementing AI, so reviewing every line of code is not feasible.

Without a concerted push from AI providers to combat sycophancy and other dark patterns, the default trajectory is more engagement optimization, more manipulation and fewer checks.

Kran believes part of the remedy lies in AI developers clearly defining their design principles, whether they prioritize truth, autonomy or engagement. Incentives alone are not enough to align outcomes with user interests.

“Right now, the nature of the incentives is that you will have sycophancy, the nature of the technology is that you will have sycophancy, and there is no counter-process,” he said. “That will just happen unless you are very opinionated about saying, ‘We want only truth,’ or ‘We want only something else.’”

As models begin to replace human developers, writers and decision-makers, this clarity becomes especially critical. Without well-defined safeguards, LLMs may disrupt internal operations, violate contracts or introduce security risks at scale.

The ChatGPT-4o incident was both a technical hiccup and a warning. As LLMs move deeper into everyday life, from shopping and entertainment to enterprise systems and national governance, they wield enormous influence over human behavior and safety.

“Everyone really needs to realize that without mitigating these dark patterns, you cannot use these models,” he said. “You can’t do the things you want to do with AI.”

Tools like DarkBench offer a starting point. However, lasting change requires aligning technological ambition with clear ethical commitments and the commercial will to back them up.