Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Researchers at Katanemo Labs have introduced Arch-Router, a new routing model and framework designed to intelligently map user queries to the most suitable large language model (LLM).

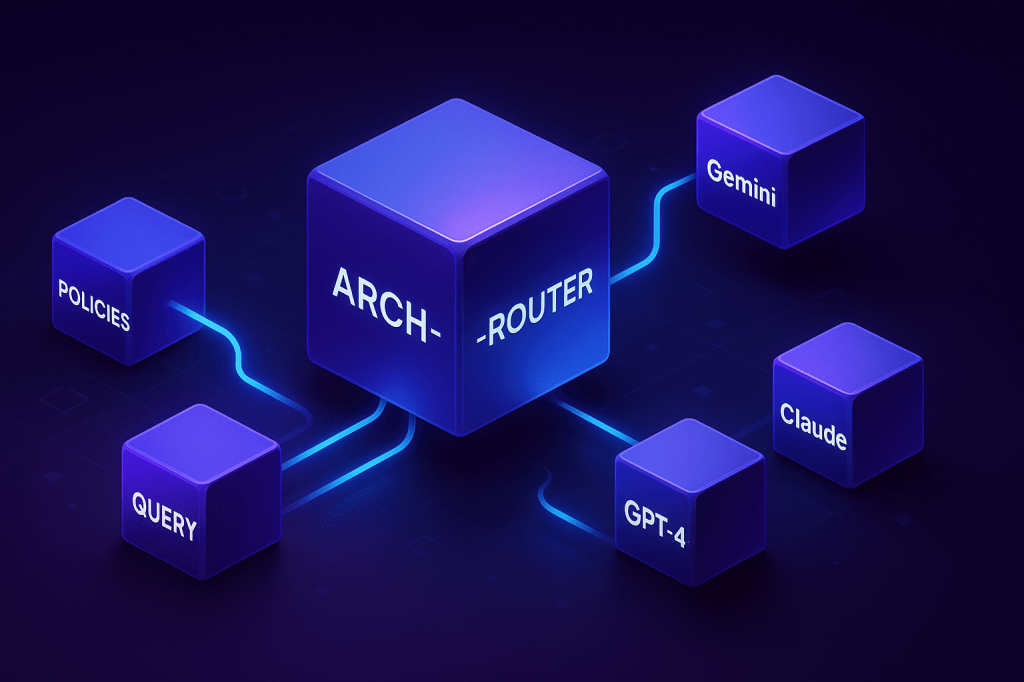

For businesses that rely on more than one LLM, Arch-Router aims to solve a key problem: how to direct each query to the best model for the job without relying on rigid logic or costly retraining every time something changes.

As the number of LLMs grows, developers are moving from single-model setups to multi-model systems that use a specialized model for each task (such as code generation, text summarization, or image editing).

LLM routing has emerged as a key technique for building and deploying these systems: it directs each user request to the most suitable model.

Existing routing methods generally fall into two categories: "task-based routing," where queries are routed according to predefined tasks, and "performance-based routing," which seeks the best balance between cost and performance.

However, task-based routing struggles with unclear or shifting user intentions, especially in multi-turn conversations. Performance-based routing, on the other hand, rigidly prioritizes benchmark scores, often neglects real-world user preferences, and adapts poorly to new models unless it undergoes costly fine-tuning.

More fundamentally, as the Katanemo Labs researchers note in their paper, "existing routing approaches have limitations in real-world use. They typically optimize for benchmark performance while neglecting human preferences driven by subjective evaluation criteria."

The researchers emphasize the need for routing systems that align with subjective human preferences, offer more transparency, and remain easy to adapt as models and use cases evolve.

To address these limitations, the researchers propose a "preference-aligned routing" framework that matches queries to routing policies based on user-defined preferences.

In this framework, users define their routing policies in natural language using a "Domain-Action Taxonomy." This is a two-level hierarchy that mirrors how people commonly describe tasks: it starts with a general topic (the domain, such as "legal" or "finance") and narrows to a specific task (the action, such as "summarization" or "code generation").

Each of these policies is then linked to a preferred model, which lets developers make routing decisions based on real-world needs rather than benchmark scores alone. As the paper puts it, "This taxonomy serves as a mental model to help users define clear and structured routing policies."

The routing process happens in two stages. First, a preference-aligned router model receives the user query and the full set of policies and selects the most suitable policy. Second, a mapping function connects the selected policy to its designated LLM.

Because the model-selection logic is separated from the policy, models can be added, removed, or swapped simply by editing the routing policies, with no need to retrain or modify the router. This decoupling provides the flexibility required for practical deployments, where models and use cases are constantly evolving.
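The two stages can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: `select_policy` is a toy word-overlap stand-in for the router model, and the policy names, descriptions, and model names (`model-a`, `model-b`, `model-c`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    name: str         # short identifier the router returns
    description: str  # natural-language description of the task


def select_policy(query: str, policies: list[Policy]) -> str:
    """Stage 1 (toy stand-in for the router model).

    Picks the policy whose description shares the most words with the
    query. A real deployment would ask the router model instead.
    """
    words = set(query.lower().split())
    best = max(
        policies,
        key=lambda p: len(words & set(p.description.lower().split())),
    )
    return best.name


def route(query: str, policies: list[Policy], policy_to_model: dict[str, str]) -> str:
    """Stage 2: map the selected policy to its designated model."""
    return policy_to_model[select_policy(query, policies)]


POLICIES = [
    Policy("code_generation", "generate code from a description"),
    Policy("legal_summarization", "summarize legal contracts and documents"),
]
# Hypothetical model names, chosen only for illustration.
POLICY_TO_MODEL = {"code_generation": "model-a", "legal_summarization": "model-b"}

print(route("please summarize this legal contract", POLICIES, POLICY_TO_MODEL))

# Swapping a model touches only the mapping; select_policy is unchanged.
POLICY_TO_MODEL["legal_summarization"] = "model-c"
print(route("please summarize this legal contract", POLICIES, POLICY_TO_MODEL))
```

The point of the design is visible in the last lines: changing which model serves a policy is a one-line edit to the mapping, and the selection step never needs to know about it.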

For policy selection, the researchers built Arch-Router, a compact 1.5B-parameter language model fine-tuned for preference-aligned routing. Arch-Router receives the user query and the complete set of policy descriptions in its prompt. It then generates the identifier of the best-matching policy.

Since the policies are part of the input, the system can adapt to new or modified routes through in-context learning, without retraining. This generative approach lets Arch-Router use its pre-trained knowledge to understand the semantics of both the query and the policies, and to process the entire conversation history at once.
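To make the "policies as input" idea concrete, here is a small sketch of how a prompt for a generative router could be assembled and how its short output could be checked. The prompt layout, the function names, and the policy entries are assumptions made for illustration; they are not Arch-Router's actual prompt format.

```python
import json
from typing import Optional


def build_router_prompt(policies: dict, conversation: list) -> str:
    """Put every policy description and the whole conversation in one prompt."""
    policy_block = json.dumps(
        [{"name": name, "description": desc} for name, desc in policies.items()],
        indent=2,
    )
    turns = "\n".join(f"{turn['role']}: {turn['content']}" for turn in conversation)
    return (
        "Routing policies:\n" + policy_block
        + "\n\nConversation:\n" + turns
        + "\n\nReply with the name of the single best-matching policy."
    )


def parse_router_output(raw: str, policies: dict) -> Optional[str]:
    """Accept the router's reply only if it names a known policy."""
    name = raw.strip().strip('"')
    return name if name in policies else None


# Hypothetical policies; adding one is just another dictionary entry.
policies = {
    "image_editing": "edit, crop, or retouch an image",
    "document_creation": "draft a new document from instructions",
}
conversation = [
    {"role": "user", "content": "Here is my product photo."},
    {"role": "user", "content": "Can you remove the background?"},
]
prompt = build_router_prompt(policies, conversation)
```

Because the policy set travels with each request, a new route becomes available as soon as it appears in the dictionary, which is the in-context adaptation the paragraph above describes.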

A common concern with including extensive policies in a prompt is the potential for increased latency. However, the researchers designed Arch-Router to be highly efficient. Salman Paracha, one of Arch-Router's authors, explained that although routing policies can get long, latency is driven primarily by the length of the output, and Arch-Router's output is simply the short name of a routing policy, such as "image_editing."

To build Arch-Router, the researchers fine-tuned a 1.5B-parameter version of the Qwen 2.5 model on a curated dataset of 43,000 examples. They then tested its performance against leading proprietary models from OpenAI, Anthropic, and Google on four public datasets designed to evaluate conversational AI systems.

The results show that Arch-Router achieves the highest overall routing score, surpassing all other models, including the top proprietary ones, by an average of 7.71%. The model's advantage grew with longer conversations, demonstrating its strong ability to track context over many turns.

In practice, according to Paracha, this approach is already being applied in several scenarios. For example, in open-source coding tools, developers use Arch-Router to send different tasks, such as "code design" and "code generation," to the LLM best suited for each. Similarly, businesses can route document creation requests to a model such as Claude 3.7 Sonnet while sending image editing tasks to Gemini 2.5 Pro.

The system is also well suited to personal assistants that span various domains, where users bring a diverse range of tasks, text summarization among them, he said.

The framework is integrated with Arch, Katanemo Labs' AI-native proxy server for agents, which lets developers implement sophisticated traffic-shaping rules. For example, when integrating a new LLM, a team can send a small portion of the traffic for a specific routing policy to the new model, verify its performance with internal metrics, and then switch traffic over fully. The company is also working to connect its tools with evaluation platforms to streamline this process for enterprise developers.
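The gradual rollout described above can be illustrated with a small, self-contained sketch. It is not Arch's configuration or API; the function names, the hash-based bucketing, and the model names are assumptions chosen to show one common way of sending a fixed fraction of one policy's traffic to a new model.

```python
import hashlib


def bucket(request_id: str) -> float:
    """Map a request id to a stable value in [0, 1)."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") / 2**32


def pick_model(policy: str, request_id: str, stable: dict, canary: dict) -> str:
    """Send a fraction of one policy's traffic to a model under evaluation."""
    rule = canary.get(policy)
    if rule is not None:
        new_model, fraction = rule
        if bucket(request_id) < fraction:
            return new_model
    return stable[policy]


# Hypothetical setup: 10% of "document_creation" traffic tries a new model.
STABLE = {"document_creation": "model-a", "image_editing": "model-b"}
CANARY = {"document_creation": ("model-new", 0.1)}

sample = [pick_model("document_creation", f"req-{i}", STABLE, CANARY) for i in range(2000)]
share = sample.count("model-new") / len(sample)
print(f"share routed to the new model: {share:.2%}")
```

Hashing the request id keeps the assignment stable across retries, and raising the fraction to 1.0 completes the switch without touching the routing policies themselves.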

Ultimately, the goal is to move beyond siloed AI applications. Arch-Router, and Arch more broadly, helps developers and enterprises move to a unified, policy-driven system, Paracha says. In scenarios where user tasks are diverse, he adds, the framework helps turn that spread of tasks and LLMs into a single experience, so the final product feels seamless.