Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Following up on previously announced plans, NVIDIA said it has open sourced elements of the Run:ai platform, including KAI Scheduler.

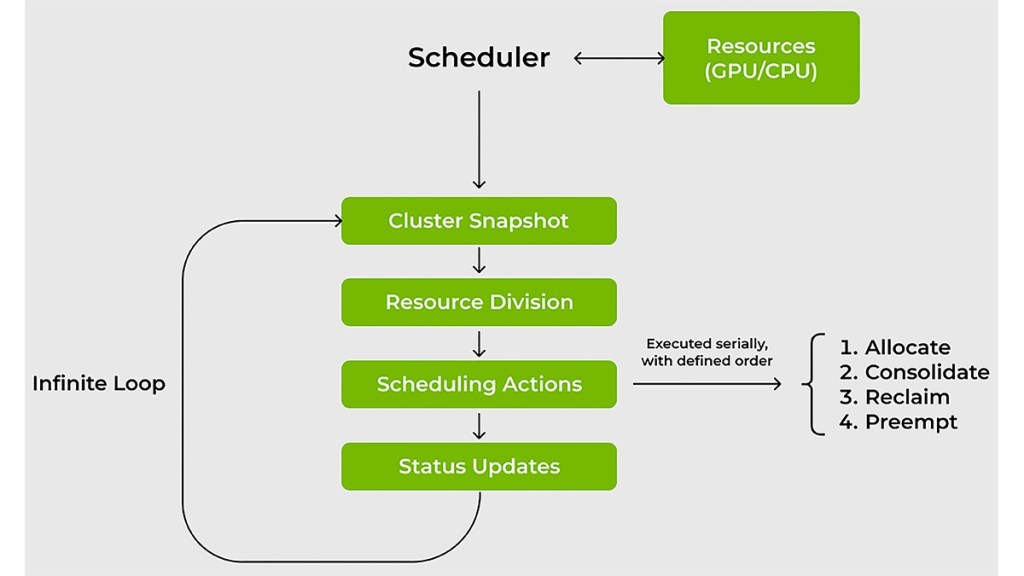

KAI Scheduler is a Kubernetes-native GPU scheduling solution that is now available under the Apache 2.0 license. Originally developed within the Run:ai platform, KAI Scheduler is now available to the community while also continuing to be packaged and delivered as part of the NVIDIA Run:ai platform.

NVIDIA said the initiative reflects its commitment to both open-source and enterprise AI infrastructure, and to fostering an active, collaborative community that encourages contributions, feedback, and innovation.

In their post, NVIDIA’s Ronen Dar and Ekin Karabulut gave an overview of KAI Scheduler’s technical details, highlighted its value for IT and ML teams, and explained the scheduling cycle and actions.

Managing AI workloads on GPUs and CPUs presents a number of challenges that traditional resource schedulers often fail to meet. KAI Scheduler was designed specifically to address these issues: managing fluctuating GPU demands; reducing wait times for compute access; providing resource guarantees for GPU allocation; and seamlessly connecting AI tools and frameworks.

AI workloads can change rapidly. For example, you may need only one GPU for interactive work (such as data exploration) and then suddenly require several GPUs for distributed training or multiple experiments. Traditional schedulers struggle with this kind of variability.

KAI Scheduler continuously recalculates fair-share values and automatically adjusts quotas and limits to match current workload demands. This dynamic approach helps ensure efficient GPU allocation without constant manual intervention from administrators.
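To make the idea of recalculating fair shares concrete, here is a minimal sketch. It is not KAI Scheduler's actual algorithm: the `Queue` fields (`quota`, `demand`, `weight`) and the `fair_shares` function are hypothetical names chosen for illustration, and the sketch assumes the guaranteed quotas add up to no more than the cluster size. Each queue first receives what it asks for up to its quota, and any spare GPUs are then divided among queues that still want more, in proportion to their weight.

```python
from dataclasses import dataclass


@dataclass
class Queue:
    name: str
    quota: float         # guaranteed GPUs (hypothetical field)
    demand: float        # GPUs currently requested
    weight: float = 1.0  # share of spare capacity (hypothetical field)


def fair_shares(queues, total_gpus):
    """Return {queue name: GPUs} for the current demand snapshot.

    Assumes sum(q.quota for q in queues) <= total_gpus.
    """
    # Step 1: every queue gets what it asks for, capped at its quota.
    shares = {q.name: min(q.demand, q.quota) for q in queues}
    spare = total_gpus - sum(shares.values())

    # Step 2: split spare capacity by weight among queues that want more,
    # repeating until the spare is gone or every queue is satisfied.
    hungry = [q for q in queues if q.demand > shares[q.name]]
    while spare > 1e-9 and hungry:
        total_weight = sum(q.weight for q in hungry)
        handed_out = 0.0
        for q in hungry:
            offer = spare * q.weight / total_weight
            take = min(offer, q.demand - shares[q.name])
            shares[q.name] += take
            handed_out += take
        spare -= handed_out
        hungry = [q for q in hungry if q.demand - shares[q.name] > 1e-9]
    return shares
```

Calling `fair_shares` again whenever demand changes is what makes the result dynamic: a queue that is idle now lends its unused quota to others, and gets it back as soon as its own demand rises.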

For ML engineers, time is of the essence. KAI Scheduler reduces wait times by combining gang scheduling, GPU sharing, and a hierarchical queuing system that lets you submit batches of jobs and then step away, confident that tasks will launch as soon as resources are available and in line with priorities and fairness.
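Gang scheduling means that the pods of a job are placed all together or not at all, so a distributed job never holds some of its GPUs while waiting for the rest. The sketch below illustrates that all-or-nothing rule with a simple greedy placement (largest request first, first node that fits). The function name and data shapes are assumptions made for this example, and the greedy heuristic can miss a placement that an exhaustive search would find.

```python
def gang_schedule(free_gpus, pod_requests):
    """Place every pod of a job, or none of them.

    free_gpus:    {node name: free GPU count}
    pod_requests: list of GPU counts, one per pod
    Returns {pod index: node name}, or None if the whole gang does not fit.
    """
    remaining = dict(free_gpus)  # work on a copy; commit only on success
    placement = {}
    # Largest pods first, so big requests are not starved by small ones.
    for index, request in sorted(enumerate(pod_requests), key=lambda p: -p[1]):
        node = next(
            (n for n in sorted(remaining) if remaining[n] >= request), None
        )
        if node is None:
            return None  # one pod does not fit, so the whole job waits
        remaining[node] -= request
        placement[index] = node
    return placement
```

Because the function only reads `free_gpus` and works on a copy, a failed attempt leaves the cluster state untouched and the job can simply be retried on the next scheduling pass.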

To further optimize resource usage, even in the face of fluctuating demand, KAI Scheduler employs two effective strategies for both GPU and CPU workloads.

Bin-packing and consolidation: Maximizes compute utilization by combating resource fragmentation, packing smaller tasks into partially used GPUs and CPUs, and by addressing node fragmentation through reallocating tasks across nodes.

Spreading: Evenly distributes workloads across nodes, or across GPUs and CPUs, to minimize the load on each node and maximize resource availability per workload.
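The difference between the two strategies comes down to which node is preferred among those that can fit a task. The following sketch shows that choice in isolation; `pick_node` and the strategy names `"binpack"` and `"spread"` are illustrative assumptions, not KAI Scheduler configuration values.

```python
def pick_node(free_gpus, request, strategy):
    """Choose a node for a task that needs `request` GPUs.

    free_gpus: {node name: free GPU count}
    strategy:  "binpack" (tightest fit) or "spread" (most free capacity)
    Returns the node name, or None if no node can fit the task.
    """
    candidates = {n: f for n, f in free_gpus.items() if f >= request}
    if not candidates:
        return None
    if strategy == "binpack":
        # Fill the fullest node that still fits, keeping large gaps intact.
        return min(candidates, key=lambda n: (candidates[n], n))
    if strategy == "spread":
        # Use the emptiest node, keeping the per-node load low.
        return min(candidates, key=lambda n: (-candidates[n], n))
    raise ValueError(f"unknown strategy: {strategy!r}")
```

With free capacity of 1, 3, and 8 GPUs on three nodes, a one-GPU task goes to the node with 1 free GPU under bin-packing and to the node with 8 free GPUs under spreading. Bin-packing leaves the large node whole for a future multi-GPU job; spreading leaves each running task more headroom.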

In shared clusters, some researchers secure more GPUs than they need early in the day to make sure they will be available later. This practice can leave resources underutilized, even when other teams still have unused quotas.

KAI Scheduler addresses this by enforcing resource guarantees. It ensures that AI practitioner teams receive their allocated GPUs, while dynamically reallocating idle resources to other workloads. This approach prevents resource hogging and promotes overall cluster efficiency.
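One way to picture a resource guarantee is as a reclaim rule: a team may borrow idle GPUs beyond its quota, but must give them back when another team asks for GPUs within its own guaranteed quota. The sketch below computes such a reclaim plan. It is an illustration under stated assumptions, not KAI Scheduler's implementation; `plan_reclaim` and its arguments are hypothetical names.

```python
def plan_reclaim(owner, target, allocated, quota, total_gpus):
    """Decide which borrowed GPUs to take back so `owner` can reach `target`.

    allocated: {queue: GPUs currently held}
    quota:     {queue: guaranteed GPUs}
    Returns {queue: GPUs to release}; empty if free GPUs already suffice.
    """
    if target > quota[owner]:
        raise ValueError("only the guaranteed quota can trigger a reclaim")
    free = total_gpus - sum(allocated.values())
    shortfall = (target - allocated[owner]) - free
    plan = {}
    # Borrowers hold more than their quota; take from the largest excess first.
    borrowers = sorted(
        (q for q in allocated if q != owner and allocated[q] > quota[q]),
        key=lambda q: allocated[q] - quota[q],
        reverse=True,
    )
    for q in borrowers:
        if shortfall <= 0:
            break
        give_back = min(shortfall, allocated[q] - quota[q])
        plan[q] = give_back
        shortfall -= give_back
    return plan
```

Under this rule nobody needs to reserve GPUs "just in case": a team's quota is always recoverable, and whatever it is not using can do useful work for others in the meantime.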

Connecting AI workloads with various AI frameworks can be daunting. Traditionally, teams face a maze of manual configuration to tie workloads together with tools such as Kubeflow, Ray, Argo, and the Training Operator. This complexity delays prototyping.

KAI Scheduler addresses this with a built-in podgrouper that automatically detects and connects with these tools and frameworks, reducing configuration complexity and accelerating development.