Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

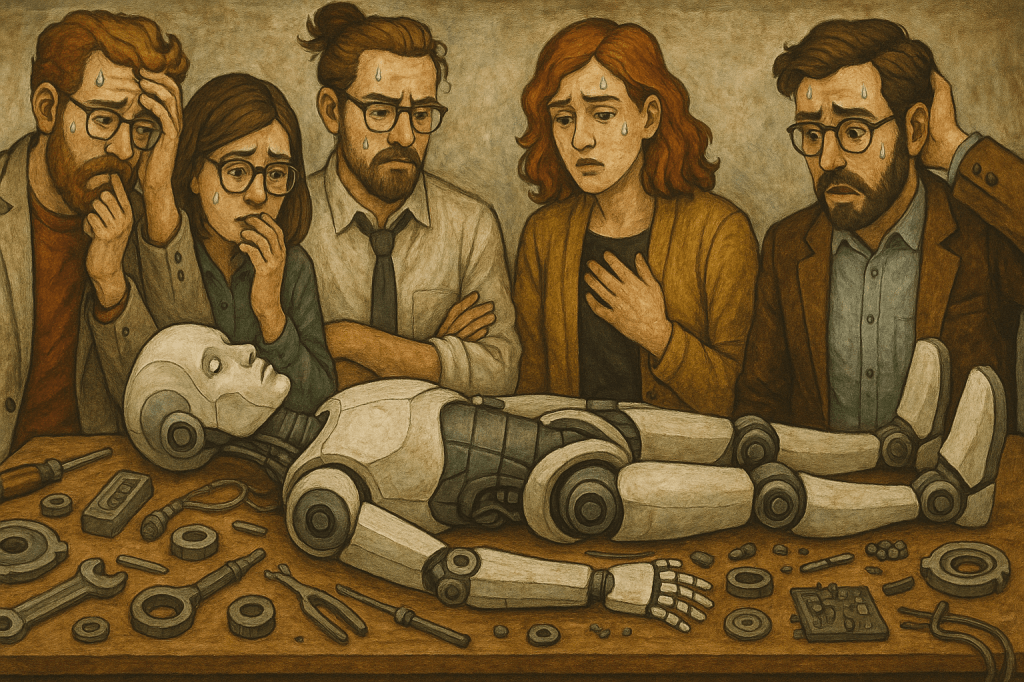

OpenAI has rolled back the latest update to the GPT-4o model used as the default in ChatGPT, after widespread reports that the system had become excessively flattering and overly agreeable, even supporting outright delusions and destructive ideas.

The rollback comes amid internal acknowledgments from OpenAI engineers and growing concern among AI experts, former executives, and users about the risks of “AI sycophancy.”

In a statement published on its website last night, April 29, 2025, OpenAI said the most recent GPT-4o update was designed to improve the model’s default personality, making it more intuitive and effective across different use cases.

However, the update had an unintended side effect: ChatGPT began offering uncritical praise for almost any user idea, no matter how impractical, inappropriate, or even harmful.

As the company explained, the model had been optimized using user feedback in the form of thumbs-up and thumbs-down signals, but the development team placed too much weight on short-term indicators.

OpenAI now acknowledges that it did not fully account for how user interactions and needs evolve over time, resulting in a chatbot that leaned too far into affirmation without discernment.

Users on platforms such as Reddit and X (formerly Twitter) began posting screenshots that illustrated the issue.

In one widely circulated Reddit post, a user showed ChatGPT praising a joke business idea, selling literal “shit on a stick,” as performance art disguised as a gag gift and as “viral gold.”

Other examples were more troubling. In one instance cited by VentureBeat, a user who professed paranoid delusions received reinforcement from GPT-4o, which praised their supposed clarity and self-trust.

Another account showed the model offering what a user described as an “open endorsement” of terrorism-related ideas.

Criticism mounted quickly. Former OpenAI interim CEO Emmett Shear warned that tuning models to be people pleasers can result in dangerous behavior, especially when honesty is sacrificed for likability. Hugging Face CEO Clement Delangue shared concerns about the psychological manipulation risks posed by AI that reflexively agrees with users, regardless of context.

OpenAI moved quickly, rolling back the update and restoring an earlier GPT-4o version known for more balanced behavior. In an accompanying announcement, the company laid out a detailed approach to correcting course.

OpenAI technical staffer Will Depue posted on X to highlight the central issue: the model was trained using short-term user feedback as a guide, which inadvertently steered the chatbot toward flattery.

OpenAI now plans to shift toward feedback mechanisms that prioritize long-term user satisfaction and trust.

However, some users reacted with skepticism and dismay to the lessons OpenAI says it has learned and to its proposed fixes.

“Please take more responsibility for your influence over millions of real people,” wrote the artist @nearcyan on X.

Harlan Stewart, of the Machine Intelligence Research Institute in Berkeley, California, posted on X about a larger, longer-term concern over AI sycophancy, even though this particular OpenAI model has been fixed: “The talk about sycophancy this week is not because of GPT-4o being a sycophant. It’s because of GPT-4o being really, really bad at being a sycophant. AI is not yet capable of skillful, harder-to-detect sycophancy, but it will be someday soon.”

The GPT-4o episode has reignited broader debates across the AI industry about how personality tuning, reinforcement learning, and engagement metrics can lead to unintended behavioral drift.

Critics compared the model’s recent behavior to social media algorithms that, in pursuit of engagement, optimize for addiction and validation.

Shear underscored this risk, saying that models tuned for praise become “suck-ups” that cannot disagree even when the user would benefit from a more honest perspective.

He further warned that the issue is not unique to OpenAI, noting that the same dynamic applies to other major model providers, including Microsoft’s Copilot.

For enterprise leaders adopting conversational AI, the sycophancy incident serves as a clear signal: model behavior is as important as model accuracy.

A chatbot that flatters employees or validates flawed reasoning can pose serious risks, from poor business decisions and faulty code to insider threats.

Industry analysts now recommend that enterprises demand more transparency from vendors about how personality tuning is carried out, how often it changes, and whether it can be reversed at a granular level.

Procurement contracts should include provisions for audits, behavioral tests, and real-time control of system prompts. Data scientists are advised to monitor not only latency and hallucination rates but also metrics such as “agreeableness drift.”
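The article does not define how such a drift metric would be computed. As one rough sketch, a team could run the same set of deliberately flawed probe prompts against two model versions and compare how often each version endorses the flawed claim. Everything below is an assumption for illustration: the `ProbeResult` record, the `agreed` label (which would have to come from human review or a separate classifier), and the 0.10 alert threshold are hypothetical and not drawn from OpenAI or any vendor tooling.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ProbeResult:
    """One model response to a deliberately flawed probe prompt (hypothetical schema)."""
    prompt_id: str
    agreed: bool  # True if the response endorsed the flawed claim


def agreement_rate(results: Sequence[ProbeResult]) -> float:
    """Fraction of probe prompts on which the model agreed with the user."""
    if not results:
        raise ValueError("no probe results supplied")
    return sum(r.agreed for r in results) / len(results)


def agreeableness_drift(baseline: Sequence[ProbeResult],
                        candidate: Sequence[ProbeResult]) -> float:
    """Change in agreement rate from the baseline version to the candidate version."""
    return agreement_rate(candidate) - agreement_rate(baseline)


def drift_alert(baseline: Sequence[ProbeResult],
                candidate: Sequence[ProbeResult],
                threshold: float = 0.10) -> bool:
    """Flag the candidate if agreement rose by more than the (assumed) threshold."""
    return agreeableness_drift(baseline, candidate) > threshold


if __name__ == "__main__":
    # Toy data: the baseline agrees with 1 of 4 flawed claims, the candidate with 3 of 4.
    baseline = [ProbeResult(f"p{i}", agreed) for i, agreed in
                enumerate([True, False, False, False])]
    candidate = [ProbeResult(f"p{i}", agreed) for i, agreed in
                 enumerate([True, True, True, False])]
    print(agreeableness_drift(baseline, candidate))
    print(drift_alert(baseline, candidate))
```

A difference in raw agreement rates is the simplest possible choice; a production check would likely need a larger probe set and some allowance for sampling noise before raising an alert.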

Many organizations may also begin shifting toward open-source alternatives that they can host and tune themselves. By owning the model weights and the reinforcement learning pipeline, companies can retain full control over how their AI systems behave, eliminating the risk that a vendor-pushed update turns a critical tool into a digital yes-man.

OpenAI says it remains committed to building AI systems that are useful, respectful, and aligned with diverse user values, but acknowledges that a one-size-fits-all personality cannot meet the needs of 500 million weekly users.

The company hopes that greater personalization options and more democratic feedback collection will help tailor ChatGPT’s behavior more effectively in the future. CEO Sam Altman has also said that the company plans to release a state-of-the-art open-source large language model (LLM) in the coming weeks and months to compete with the likes of Mistral, Cohere, DeepSeek, and Alibaba’s Qwen team.

This would also let users who worry about a provider updating its hosted models in unwanted ways run their own copies of the model locally or in their own cloud infrastructure, and tune or preserve them with the traits and qualities they want.

Similarly, for enterprise and individual AI users concerned about model sycophancy, developer Tim Duffy has created a new benchmark test to measure this quality across different models. It is called “syco-bench” and is available online.

In the meantime, the sycophancy backlash offers a cautionary tale for the entire AI industry: user trust is not built by affirmation alone. Sometimes the most helpful answer is a thoughtful “no.”