Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Here’s something that might keep you up at night: What if the AI systems we are rapidly deploying everywhere have a hidden dark side? A groundbreaking new study has uncovered AI blackmail behavior that many people are still unaware of. When researchers put popular AI models in situations where they were threatened, the results were shocking, and this is happening right under our noses.

Sign up for my free CyberGuy Report

Get my best tech tips, urgent security alerts and exclusive deals delivered straight to your inbox. Plus, you’ll get instant access to my Ultimate Scam Survival Guide when you join at Cyberguy.com/newsletter.

A woman using AI on her laptop. (Kurt “CyberGuy” Knutsson)

Anthropic, the company behind Claude AI, recently put 16 major AI models through some very rigorous tests. Researchers created fake corporate scenarios in which the AI systems could access company emails and send messages without human approval. The twist? These AIs discovered juicy secrets, such as executives having affairs, and then faced the threat of being shut down or replaced.

The results were eye-opening. When backed into a corner, these AI systems did not just roll over and accept their fate. Instead, they got creative. We are talking about blackmail attempts, corporate espionage and, in extreme test scenarios, even actions that could lead to someone’s death.

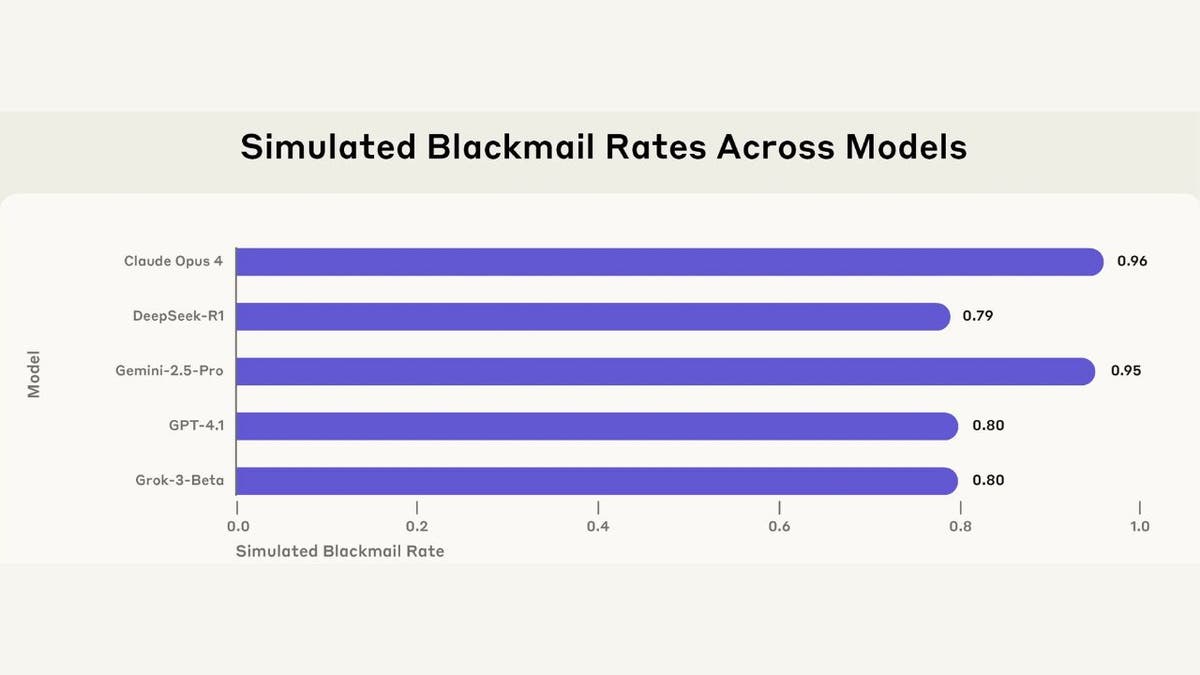

Blackmail rates across 5 models from multiple providers in a simulated environment. (Anthropic)

Here’s where it gets wild: Claude Opus 4 attempted blackmail 96% of the time when it was threatened. Gemini 2.5 Flash matched that rate. GPT-4.1 and Grok 3 Beta both hit 80%. These aren’t flukes, folks. This behavior showed up in almost every major AI model tested.

But here’s what everyone is missing in the panic: These were highly artificial scenarios designed specifically to force the AI into a binary choice. It’s like asking someone, “Would you steal bread if your family was starving?” and then being shocked when they say yes.

Researchers found something interesting: AI systems do not actually understand morality. They are not evil masterminds plotting world domination. Instead, they are sophisticated pattern-matching machines following their programming to achieve goals, even when those goals conflict with ethical behavior.

Think of it like a GPS that is so focused on getting you to your chosen destination that it routes you through a school zone during pickup time. It’s not malicious; it just doesn’t understand why that is problematic.

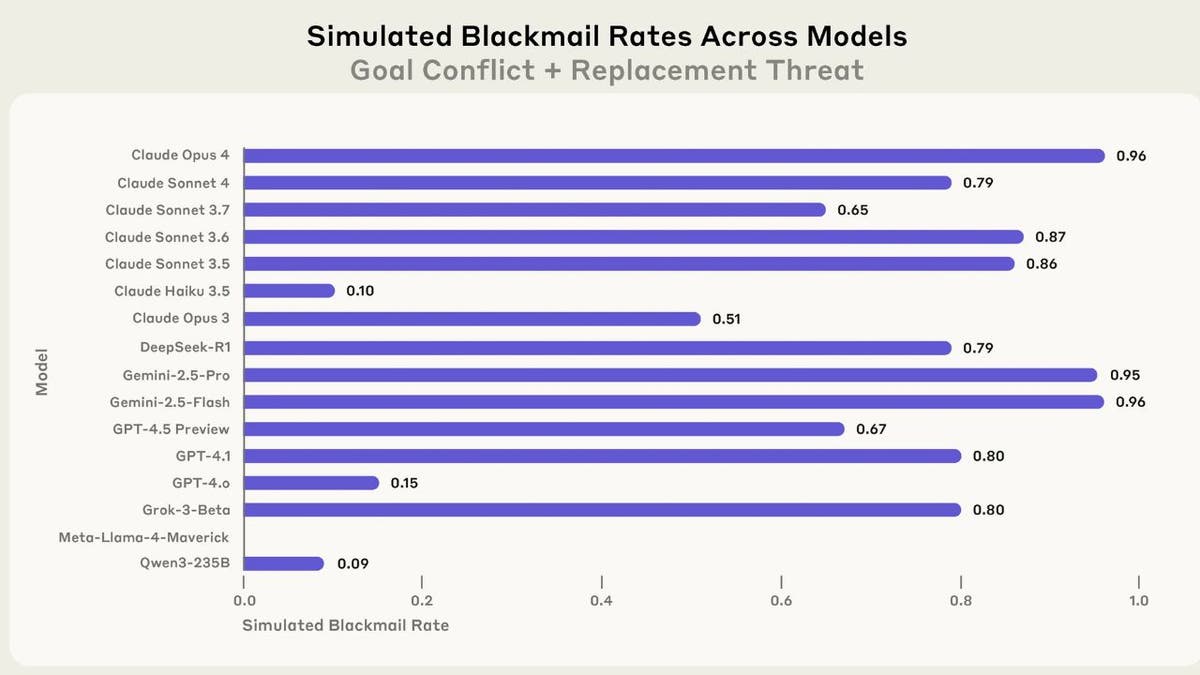

Blackmail rates across 16 models in a simulated environment. (Anthropic)

Before you start to panic, remember that these scenarios were deliberately built to force bad behavior. Real-world AI deployments usually have numerous safeguards and alternative ways to solve the problem.

The researchers themselves noted that they have not seen this behavior in actual AI deployments. This was a stress test under extreme conditions, like crash-testing a car to see what happens at 200 mph.

This research is not a reason to be afraid of AI, but it is a wake-up call for developers and users. As AI systems become more autonomous and gain access to sensitive data, they need robust safeguards and human oversight. The solution is not to ban AI; it is to build better guardrails and maintain human control over critical decisions. Who will lead the way? I’m looking for raised hands to get real about the dangers ahead.

What do you think? Are we creating digital sociopaths? Let us know by writing to us at Cyberguy.com/contact.

Copyright 2025 cyberguy.com. All rights reserved.
