Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

The original version of this story appeared in Quanta Magazine.

Large language models work well because they are so large. The latest models from OpenAI, Meta, and DeepSeek use hundreds of billions of "parameters," the adjustable knobs that determine connections among data and are tweaked during training. With more parameters, models are better able to identify patterns and connections, which in turn makes them more powerful and accurate.

But this power comes at a cost. Training a model with hundreds of billions of parameters takes huge computational resources. To train its Gemini 1.0 Ultra model, for example, Google reportedly spent $191 million. Large language models (LLMs) also require considerable computational power each time they answer a request, which makes them notorious energy hogs. A single query to ChatGPT consumes about 10 times as much energy as a single Google search, according to the Electric Power Research Institute.

In response, some researchers are now thinking small. IBM, Google, Microsoft, and OpenAI have all recently released small language models (SLMs) that use a few billion parameters, a fraction of what their LLM counterparts use.

Small models are not used as general-purpose tools like their larger cousins. But they can excel at specific, more narrowly defined tasks, such as answering patient questions as a chatbot and gathering data in smart devices. "For a lot of tasks, an 8 billion-parameter model is actually pretty good," said Zico Kolter, a computer scientist at Carnegie Mellon University. Small models can also run on a laptop or cell phone instead of a huge data center. (There is no consensus on the exact definition of "small.")
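To see why a model of this size can fit on a laptop, a rough back-of-the-envelope calculation helps. The sketch below estimates only the memory needed to store the weights; the function name, the 16-bit and 4-bit storage formats, and the 200-billion-parameter comparison point are illustrative assumptions, not figures from the researchers quoted here.

```python
def weight_memory_gb(num_parameters: int, bits_per_parameter: int) -> float:
    """Approximate memory needed to store the weights alone, in gigabytes (10^9 bytes).

    Ignores activations, caches, and runtime overhead, so real usage is higher.
    """
    total_bytes = num_parameters * bits_per_parameter / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Hypothetical model sizes chosen for illustration only.
    models = {
        "8 billion parameters": 8_000_000_000,
        "200 billion parameters": 200_000_000_000,
    }
    for label, count in models.items():
        for bits in (16, 4):
            gigabytes = weight_memory_gb(count, bits)
            print(f"{label} at {bits}-bit: about {gigabytes:.0f} GB")
```

Under these assumptions, an 8 billion-parameter model needs roughly 16 GB at 16 bits per parameter and about 4 GB at 4 bits, which is within reach of consumer hardware. A model 25 times larger is not.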

Researchers use a few tricks to optimize the training process for these small models. Large models often scrape raw training data from the internet, and this data can be disorganized, messy, and hard to process. But these large models can then generate a high-quality data set that can be used to train a small model. The approach, called knowledge distillation, gets the larger model to effectively pass on its training, like a teacher giving lessons to a student. "The reason [SLMs] get so good with such small models and such little data is that they use high-quality data instead of the messy stuff," Kolter said.
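Distillation comes in several forms. The article describes a teacher model generating a clean data set; a closely related and widely used variant instead trains the student to match the teacher's output probabilities. The sketch below illustrates that second variant as a loss function in plain Python. The function names, the example scores, and the temperature value are assumptions for illustration, and nothing here is claimed to be the method any particular lab uses.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities; higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    largest = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - largest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the student's softened distribution to the teacher's.

    The loss is zero when the student reproduces the teacher exactly and
    grows as the two distributions drift apart.
    """
    teacher_probs = softmax(teacher_logits, temperature)
    student_probs = softmax(student_logits, temperature)
    return sum(
        p * math.log(p / q)
        for p, q in zip(teacher_probs, student_probs)
        if p > 0
    )


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]        # hypothetical teacher scores for three tokens
    good_student = [3.8, 1.1, 0.4]   # close to the teacher
    poor_student = [0.5, 4.0, 1.0]   # prefers a different token
    print("good student loss:", distillation_loss(teacher, good_student))
    print("poor student loss:", distillation_loss(teacher, poor_student))
```

In a real training loop, a loss of this kind would be minimized by adjusting the student's parameters, usually alongside an ordinary loss on labeled data.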

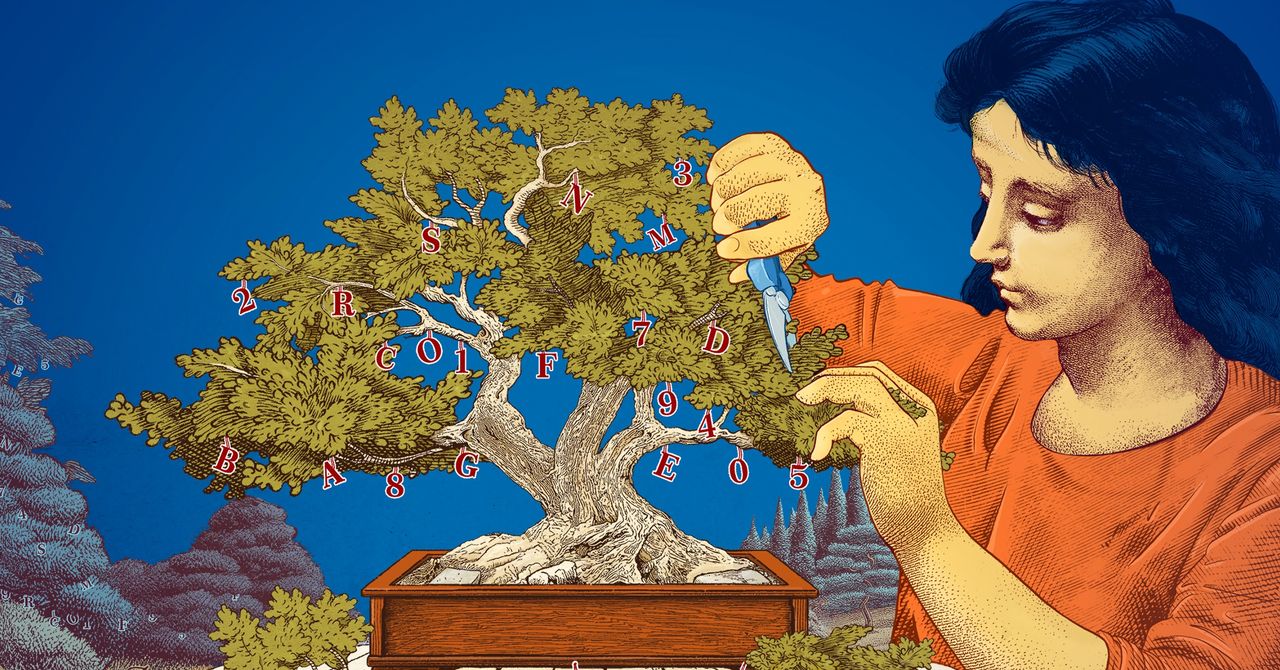

Researchers have also explored ways to create small models by starting with large ones and trimming them down. One method, known as pruning, entails removing unnecessary or inefficient parts of a neural network, the sprawling web of connected data points that underlies a large model.

Pruning was inspired by a real-life neural network, the human brain, which gains efficiency by snipping connections between synapses as a person ages. Today's pruning approaches trace back to a 1989 paper in which the computer scientist Yann LeCun, now at Meta, argued that a large share of the parameters in a trained neural network could be removed without sacrificing efficiency. He called the method "optimal brain damage." Pruning can help researchers fine-tune a small language model for a particular task or environment.
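The basic idea of pruning can be shown with a much simpler heuristic than the one in the 1989 paper. The sketch below uses magnitude pruning, which zeroes out the weights closest to zero on the assumption that they contribute least. The 1989 method instead estimates each parameter's importance from second-derivative information, which this example does not attempt. The function name and the sample weights are hypothetical.

```python
def magnitude_prune(weights, fraction):
    """Return a copy of `weights` with the smallest-magnitude `fraction` set to zero.

    This is plain magnitude pruning, a stand-in used for illustration only.
    """
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    drop_count = int(len(weights) * fraction)
    # Indices ordered from the smallest absolute value to the largest.
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    dropped = set(by_magnitude[:drop_count])
    return [0.0 if i in dropped else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    # Hypothetical weights from one small layer.
    layer = [0.5, -0.01, 2.0, 0.03, -1.2, 0.002, 0.9, -0.04, 0.07, 0.3]
    pruned = magnitude_prune(layer, 0.5)
    remaining = sum(1 for w in pruned if w != 0.0)
    print(pruned)
    print(f"{remaining} of {len(layer)} weights remain")
```

In practice, a pruned network is usually retrained briefly so the remaining weights can compensate for the ones that were removed.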

For researchers interested in how language models do the things they do, smaller models offer an inexpensive way to test novel ideas. And because they have fewer parameters than large models, their reasoning might be more transparent. "If you want to make a new model, you need to try things," said Leshem Choshen, a research scientist at the MIT-IBM Watson AI Lab. "Small models allow researchers to experiment with lower stakes."

The big, expensive models, with their ever-increasing parameters, will remain useful for applications like generalized chatbots, image generators, and drug discovery. But for many users, a small, targeted model will work just as well, while being easier for researchers to train and build. "These efficient models can save money, time, and compute," Choshen said.

Original story reprinted with permission from Quanta Magazine, an editorially independent publication of the Simons Foundation whose mission is to enhance public understanding of science by covering research developments and trends in mathematics and the physical and life sciences.
