Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

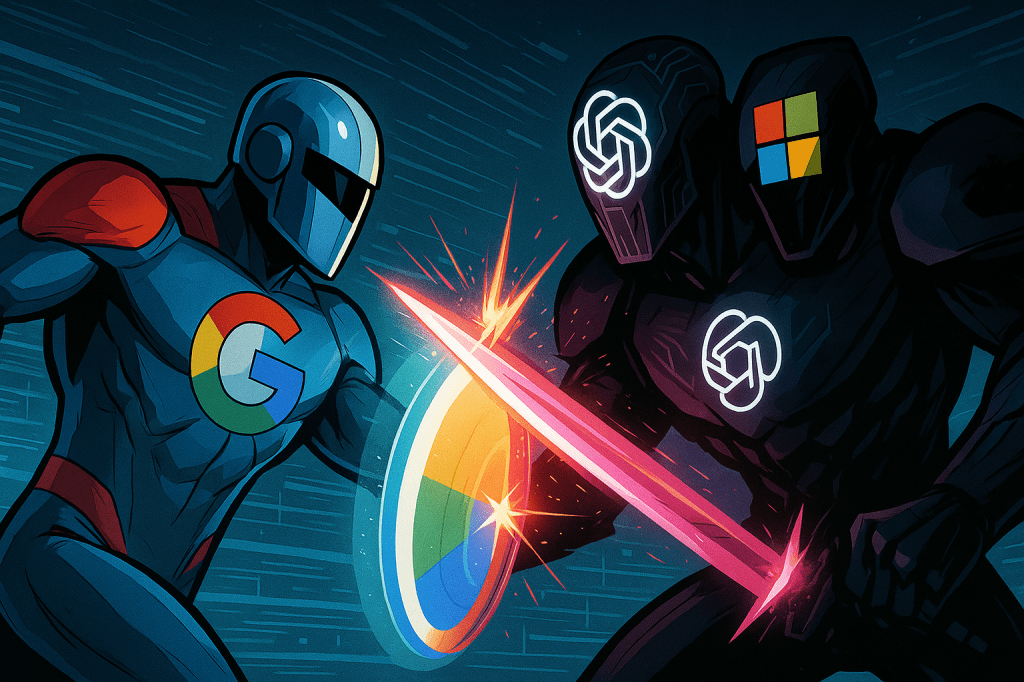

The relentless pace of generative AI innovation shows no signs of slowing. In just the past few weeks, OpenAI released its powerful o3 and o4-mini reasoning models alongside the GPT-4.1 series, while Google countered with Gemini 2.5 Flash, rapidly iterating on its flagship Gemini 2.5 Pro, released shortly before. For enterprise technical leaders navigating this dizzying landscape, choosing the right AI platform requires looking well beyond rapidly shifting model benchmarks.

While model-versus-model benchmarks grab headlines, the decision facing technical leaders runs much deeper. Choosing an AI platform is a commitment to an ecosystem, affecting everything from core compute costs and agent development strategy to enterprise integration.

But perhaps the starkest differentiator, less visible on the surface but with profound long-term implications, lies in the economics of the hardware powering these AI giants. Thanks to its custom silicon, Google holds a massive cost advantage, potentially running its AI workloads at a fraction of the cost of relying on NVIDIA’s market-dominant (and high-margin) GPUs.

This analysis goes beyond benchmarks to compare the Google and OpenAI/Microsoft AI ecosystems across the factors enterprises must weigh today: the significant disparity in compute economics, diverging strategies for building AI agents, trade-offs in model capabilities, and the realities of enterprise fit and distribution. The analysis builds on an in-depth video discussion of these shifts between me and AI developer Sam Witteveen earlier this week.

The most significant, yet often under-discussed, advantage Google holds is its “secret weapon”: a decade-long investment in custom Tensor Processing Units (TPUs). OpenAI and the broader market rely heavily on NVIDIA’s powerful but expensive GPUs (such as the H100 and A100). Google, on the other hand, designs and deploys its own TPUs, such as the recently unveiled Ironwood generation, for its core AI workloads. This includes training and serving Gemini models.

Why does this matter? It makes a huge cost difference.

NVIDIA’s GPUs command gross margins that analysts estimate at around 80% for data center chips like the H100 and the upcoming B100. This means OpenAI (via Microsoft Azure) pays a hefty premium, the “NVIDIA tax,” for its compute power. By manufacturing TPUs in-house, Google effectively bypasses this markup.
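
The “NVIDIA tax” follows directly from the margin figure. Gross margin is (price - cost) / price, so price = cost / (1 - margin). The short Python sketch that follows converts a margin into the multiple a buyer pays over production cost. The function name is purely illustrative, and only the ~80% value comes from the text; 70% and 85% are neighbors chosen to show sensitivity.

```python
def price_multiple(gross_margin: float) -> float:
    """Return the ratio of selling price to unit cost for a given gross margin.

    Gross margin m is (price - cost) / price, so price = cost / (1 - m).
    """
    if not 0 <= gross_margin < 1:
        raise ValueError("gross margin must be in [0, 1)")
    return 1 / (1 - gross_margin)


# ~80% is the analyst estimate; 70% and 85% are illustrative neighbors.
for margin in (0.70, 0.80, 0.85):
    print(f"{margin:.0%} margin -> price is {price_multiple(margin):.1f}x unit cost")
```

At 80% the multiple is 5x. The curve steepens as margins climb: moving from 80% to 85% takes the buyer from 5x to about 6.7x.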

While a GPU might cost NVIDIA $3,000-5,000 to manufacture, hyperscalers like Microsoft (which supplies OpenAI) pay $20,000-35,000+ per unit, according to reports. Industry conversations and analysis suggest that Google may obtain its AI compute at roughly 20% of the cost incurred by those purchasing high-end NVIDIA GPUs. Even though the exact numbers are internal, the implication is a 4x-6x cost-efficiency advantage per unit of compute for Google at the hardware level.
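
A little arithmetic turns the reported ranges into bounds. This Python sketch computes the narrowest and widest markup consistent with the quoted manufacturing and purchase prices. It also converts the “roughly 20% of the cost” estimate into a cost advantage. The helper names are hypothetical. The sketch treats the reported numbers as exact endpoints and ignores what Google itself spends on TPU design, fabrication and operation. It is a rough illustration, not an estimate of either company’s actual costs.

```python
def ratio_bounds(cost: tuple[float, float], price: tuple[float, float]) -> tuple[float, float]:
    """Smallest and largest price/cost ratio consistent with two (low, high) ranges."""
    cost_low, cost_high = cost
    price_low, price_high = price
    return price_low / cost_high, price_high / cost_low


def cost_advantage(cost_share: float) -> float:
    """Per-unit advantage if one buyer pays `cost_share` of what another pays."""
    return 1 / cost_share


# Reported figures: $3,000-5,000 to manufacture, $20,000-35,000+ per unit.
low, high = ratio_bounds(cost=(3_000, 5_000), price=(20_000, 35_000))
print(f"GPU price is {low:.1f}x to {high:.1f}x its manufacturing cost")

# Paying ~20% of the cost gives a 5x advantage; the 4x-6x range
# corresponds to paying between 25% and about 16.7%.
for share in (0.25, 0.20, 1 / 6):
    print(f"pays {share:.1%} -> {cost_advantage(share):.1f}x advantage")
```

The 11.7x upper bound pairs the highest reported price with the lowest reported cost, so it is an extreme. The “+” on $35,000 also means real prices can run higher still. The 20% estimate maps to 5x, the midpoint of the 4x-6x range.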

This structural advantage is reflected in API pricing. Comparing flagship models, OpenAI’s o3 is roughly 8 times more expensive for input tokens and 4 times more expensive for output tokens than Google’s Gemini 2.5 Pro (for standard context lengths).
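
Input and output tokens are priced differently, so the effective premium for a real workload depends on its token mix. This Python sketch assumes illustrative Gemini 2.5 Pro standard-context prices of $1.25 and $10 per million input and output tokens; check current price lists before relying on them. It derives o3 prices from the 8x and 4x ratios above. The class and constant names are hypothetical, and the token counts in the loop are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_m: float   # USD per million input tokens
    output_per_m: float  # USD per million output tokens

    def cost(self, input_tokens: int, output_tokens: int) -> float:
        """Dollar cost of one workload at these per-token prices."""
        return (input_tokens * self.input_per_m
                + output_tokens * self.output_per_m) / 1_000_000


# Assumed standard-context prices for illustration only; the o3 prices
# are derived from the 8x (input) and 4x (output) ratios.
GEMINI_PRO = Pricing(input_per_m=1.25, output_per_m=10.00)
O3 = Pricing(input_per_m=GEMINI_PRO.input_per_m * 8,
             output_per_m=GEMINI_PRO.output_per_m * 4)


def o3_cost_multiple(input_tokens: int, output_tokens: int) -> float:
    """How many times more the same workload costs on o3 than on Gemini 2.5 Pro."""
    return (O3.cost(input_tokens, output_tokens)
            / GEMINI_PRO.cost(input_tokens, output_tokens))


# Balanced, input-heavy and output-heavy workloads (token counts are made up).
for inp, out in [(1_000_000, 1_000_000), (10_000_000, 1_000_000), (1_000_000, 10_000_000)]:
    print(f"{inp:>10,} in, {out:>10,} out -> {o3_cost_multiple(inp, out):.2f}x")
```

Whatever the mix, the result is a weighted average of 8 and 4. The weights are how much of the Gemini bill is input versus output, so the multiple always lands between 4x and 8x. Input-heavy jobs such as long-document analysis drift toward 8x.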

This cost differential isn’t academic; it has profound strategic implications. Google can likely sustain lower prices, giving enterprises a more predictable long-term total cost of ownership (TCO), and that is exactly what it is doing in practice right now.

OpenAI’s costs, meanwhile, are tied to NVIDIA’s pricing power and the terms of its Azure deal. Indeed, compute costs represented an estimated 55-60% of OpenAI’s total $9B in operating expenses in 2024, according to some reports, and are projected to exceed 80% in 2025 as the company scales. OpenAI’s projected revenue growth is astronomical, potentially reaching $125 billion by 2029 according to reported internal forecasts. Even so, managing this compute spend remains a critical challenge, driving its pursuit of custom silicon.
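
The reported shares translate into dollar figures with one multiplication. This Python sketch applies the 55-60% estimate to the $9B operating-expense figure for 2024. Both inputs are the reported estimates quoted above; the constant and helper names are illustrative.

```python
OPEX_2024_USD = 9e9                 # reported total operating expenses, 2024
COMPUTE_SHARE_2024 = (0.55, 0.60)   # estimated share of OpEx spent on compute


def compute_spend(opex: float, share: float) -> float:
    """Dollar spend on compute implied by a total budget and a compute share."""
    return opex * share


low, high = (compute_spend(OPEX_2024_USD, s) for s in COMPUTE_SHARE_2024)
print(f"Implied 2024 compute spend: ${low / 1e9:.2f}B to ${high / 1e9:.2f}B")
print(f"Left for everything else:   ${(OPEX_2024_USD - high) / 1e9:.2f}B to "
      f"${(OPEX_2024_USD - low) / 1e9:.2f}B")
```

The 2025 projection (more than 80%) is stated only as a share. Turning it into dollars would require assuming a 2025 operating budget, which the article does not give.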

Beyond hardware, the two giants are pursuing divergent strategies for building and deploying the AI agents poised to automate enterprise workflows.

Google is making a clear push for interoperability and a more open ecosystem. At Cloud Next two weeks ago, it unveiled the Agent-to-Agent (A2A) protocol, designed to let agents built on different platforms communicate. It launched this alongside its Agent Development Kit (ADK) and the Agentspace hub for discovering and managing agents. A2A adoption faces hurdles. Key players such as Anthropic haven’t signed on (VentureBeat reached out to Anthropic about this, but Anthropic declined to comment), and some developers question whether it is needed alongside Anthropic’s existing Model Context Protocol (MCP). Google’s intent is clear: to foster a multi-vendor agent marketplace, potentially hosted within its Agent Garden or via a rumored Agent App Store.
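
The core idea behind an interoperability protocol paired with a discovery hub is simple. Agents describe themselves in a vendor-neutral way, so a caller can find one by capability without caring who built it. This Python sketch illustrates only that idea. It is not the A2A wire format or any Google API: the `AgentProfile` fields, the `AgentDirectory` class and the example.com URLs are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AgentProfile:
    """Hypothetical vendor-neutral description an agent publishes about itself."""
    name: str
    vendor: str
    endpoint: str
    skills: frozenset[str] = field(default_factory=frozenset)


class AgentDirectory:
    """Toy discovery hub: agents from any vendor register; callers search by skill."""

    def __init__(self) -> None:
        self._profiles: list[AgentProfile] = []

    def register(self, profile: AgentProfile) -> None:
        self._profiles.append(profile)

    def find(self, skill: str) -> list[AgentProfile]:
        """Return every registered agent advertising `skill`, regardless of vendor."""
        return [p for p in self._profiles if skill in p.skills]


directory = AgentDirectory()
directory.register(AgentProfile("invoice-reader", "vendor-a",
                                "https://agents.example.com/a",
                                frozenset({"extract_invoice"})))
directory.register(AgentProfile("payments-bot", "vendor-b",
                                "https://agents.example.org/b",
                                frozenset({"extract_invoice", "schedule_payment"})))

print([p.vendor for p in directory.find("extract_invoice")])
```

A real protocol must also define transport, authentication, and how tasks are handed off and tracked. The toy directory leaves all of that out.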

OpenAI, by contrast, appears focused on building powerful, tool-using agents tightly integrated within its own stack. The new o3 model exemplifies this, capable of making hundreds of tool calls within a single reasoning chain. Developers use the Responses API and Agents SDK, along with tools like the new Codex CLI, to build sophisticated agents that operate within the OpenAI/Azure trust boundary. While Microsoft offers some flexibility, OpenAI’s core strategy seems less about cross-platform communication and more about maximizing agent capabilities vertically within its controlled environment.
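
A model that makes many tool calls within one reasoning chain is, at its core, running a loop. It decides on an action, executes the tool, appends the result to its context, and repeats until it can answer. This Python sketch shows that loop with a scripted stand-in for the model. It does not use OpenAI’s Responses API or Agents SDK; `scripted_model`, the `TOOLS` table and the `max_steps` cap are hypothetical.

```python
from typing import Callable

# Hypothetical tools; a real agent would call search APIs, code runners, etc.
TOOLS: dict[str, Callable[[str], str]] = {
    "search": lambda query: f"results for {query!r}",
    "add": lambda expr: str(sum(int(term) for term in expr.split("+"))),
}


def scripted_model(history: list[str]) -> tuple[str, str]:
    """Stand-in for a reasoning model: returns (tool_name, argument) to call a
    tool, or ("final", answer) once it has what it needs."""
    if not any(entry.startswith("add ->") for entry in history):
        return "add", "2+2"
    return "final", history[-1].split("-> ", 1)[1]


def run_agent(task: str, max_steps: int = 100) -> str:
    """Loop: ask the model for an action, run the tool, feed the result back.

    `max_steps` caps model turns; each turn is one tool call or the final answer.
    """
    history = [f"task: {task}"]
    for _ in range(max_steps):
        action, argument = scripted_model(history)
        if action == "final":
            return argument
        result = TOOLS[action](argument)
        history.append(f"{action} -> {result}")
    raise RuntimeError("step budget exhausted before a final answer")


print(run_agent("What is two plus two?"))
```

In a real deployment the model, not a script, chooses each action. The step cap matters: an agent allowed hundreds of tool calls needs a budget so that a confused chain cannot loop forever.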

The relentless release cycle means model leadership is fleeting. OpenAI’s o3 currently edges out Gemini 2.5 Pro on some coding benchmarks such as SWE-bench Verified, while Gemini 2.5 Pro matches or leads on others such as AIME. Gemini 2.5 Pro is also the overall leader on the large language model (LLM) Arena leaderboard. For many enterprise use cases, however, the models have reached rough parity in core capabilities.

The real difference lies in their distinct trade-offs:

Ultimately, adoption often hinges on how easily a platform slots into an enterprise’s existing infrastructure and workflows.

Google’s strength lies in deep integration for existing Google Cloud and Workspace customers. Together, Gemini models, Vertex AI, Agentspace and tools like BigQuery offer a unified control plane, data governance and potentially faster time-to-value for companies already invested in Google’s ecosystem. Google is actively courting large enterprises, showcasing deployments with firms such as Wendy’s, Wayfair and Wells Fargo.

OpenAI, via Microsoft, has unparalleled market reach and accessibility. ChatGPT’s enormous user base (~800M MAU) creates broad familiarity. More importantly, Microsoft is embedding OpenAI models (including the latest o-series) into Copilot and Azure services. This puts powerful AI within reach of potentially hundreds of millions of enterprise users, inside the tools they already use every day. For organizations standardized on Azure and Microsoft 365, adopting OpenAI can be a more natural extension. In addition, the extensive use of OpenAI APIs by developers means many enterprise prompts and workflows are already optimized for OpenAI models.

The generative AI platform war between Google and OpenAI/Microsoft has moved beyond simple model comparisons. While both offer state-of-the-art capabilities, they represent different strategic bets and present distinct advantages and trade-offs for the enterprise.

Enterprises must weigh differing approaches to agent frameworks, the trade-offs in model capabilities such as context length, and the practicalities of enterprise integration and distribution.

However, looming over all these factors is the stark reality of compute cost. It is perhaps the most critical and defining long-term differentiator, especially if OpenAI cannot address it quickly. Google’s vertically integrated TPU strategy potentially lets it bypass the ~80% “NVIDIA tax” built into GPU pricing. That represents a fundamental economic advantage, and potentially a game-changing one.

This is more than a minor price difference; it affects everything from API affordability and long-term TCO to the scalability of AI deployments. As AI workloads grow exponentially, the platform with the more sustainable economic engine, fueled by hardware cost efficiency, holds a powerful strategic edge. Google is leveraging this advantage while also pushing an open vision for agent interoperability.

OpenAI, backed by Microsoft’s scale, counters with deeply integrated tool-using models and unparalleled market reach, although questions remain about its cost structure and model reliability.

To make the right choice, enterprise technical leaders must weigh long-term TCO implications, agent strategy and openness, raw reasoning power against their tolerance for reliability risk, their existing technology stack and their specific application needs.

Watch the video where Sam Witteveen and I break it down: