
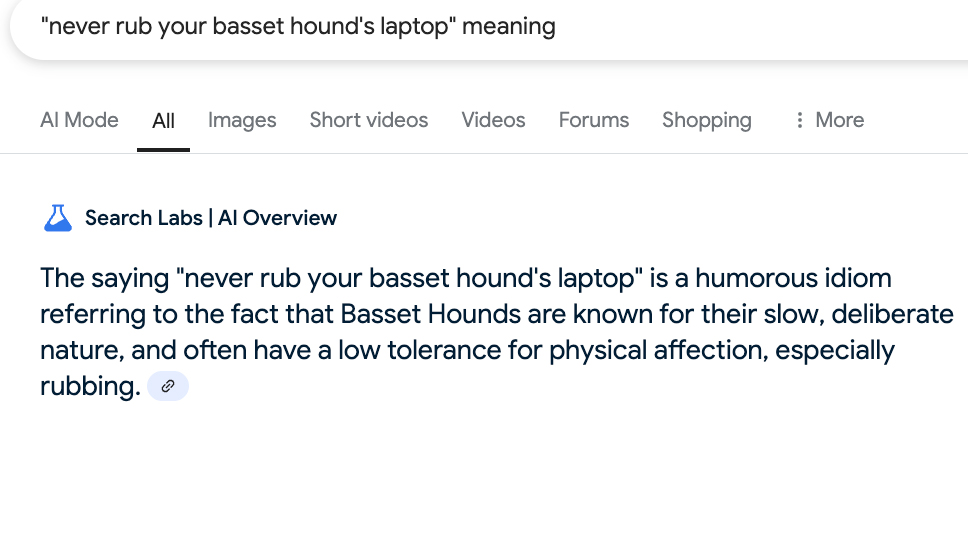

Big Tech has poured countless dollars and resources into AI, preaching the gospel of its utopia-creating brilliance. Its algorithms are here to remind us to keep that enthusiasm in check. Big time. The latest evidence: you can trick Google’s AI Overview (the automated answers at the top of your search queries) into explaining made-up, nonsensical idioms as if they were real.

According to Google’s AI Overview (via @gregjenner on Bluesky), “You can’t lick a badger twice” means that you can’t trick or deceive someone a second time after they have been tricked once.

That sounds like a logical attempt to explain the idiom, if only it weren’t poppycock. The Gemini-powered feature failed by assuming the query referred to an established expression rather than absurd mumbo jumbo designed to trick it. In other words, AI hallucinations are still alive and well.

We tried a little silliness ourselves and found similar results.

Google’s answer claimed that “You can’t golf without a fish” is a riddle or play on words, suggesting that you can’t play golf without the necessary equipment, specifically a golf ball. Amusingly, the AI Overview added that the golf ball might be seen as a “fish.” Hmm.

Then there’s the age-old saying, “You can’t open a peanut butter jar with two left feet.” According to the AI Overview, this means you can’t do something that requires skill or dexterity. Again, a noble stab at the assigned task, made without stepping back to check whether the saying actually exists.

There’s more. “You can’t marry pizza” is a playful way of expressing the concept of marriage as a commitment between two people, not a food item. (Naturally.) “Rope won’t pull a dead fish” means that something can’t be achieved through force or effort alone; it requires a willingness to cooperate or a natural progression. (Of course!) “Eat the biggest chalupa first” is a playful way of suggesting that when you face a big challenge or a plentiful meal, you should start with the most substantial part or item. (Sage advice.)

This is hardly the first example of AI hallucinations that, if not fact-checked by the user, could lead to misinformation or real-life consequences. Just ask the ChatGPT lawyers, Steven Schwartz and Peter LoDuca, who were fined $5,000 in 2023 for using ChatGPT to research a brief in a client’s litigation. The AI chatbot generated nonexistent cases, which the pair cited and which the other side’s attorneys (quite understandably) couldn’t locate.

The pair’s response to the judge’s discipline? “We made a good faith mistake in failing to believe that a piece of technology could be making up cases out of whole cloth.”
